32 minute read · August 10, 2017

Using Pandas With Dremio For Quantitative Sports Betting

· Dremio Team

Intro

In this tutorial we’ll show you how to use Pandas with Dremio by working through a quantitative model for sports betting.

Assumptions

We assume you are familiar with Python, and we assume you have access to a Dremio instance and are familiar with the basics. If you’re just getting started with Dremio we suggest you first read Getting Oriented to Dremio and Working With Your First Dataset.

We do not assume you play or like sports, nor do we assume you gamble. 🙂

Quantitative sports betting using Dremio

As financial markets become more and more efficient, institutional investors, as well as private individuals, are searching for alternatives to increase their returns and diversify their portfolio. Over the last few years, an ever increasing number of people have started focusing on the sports betting markets as a potential source of investment/trading opportunities. With the rise of on-line sports betting websites and exchanges, the possibility of applying long established quantitative trading approaches to sports betting has become a reality.

The basis of every quantitative trading strategy, whether one decides to trade S&P 500 stocks, futures contracts or the Champions League matches is, undeniable, data. Data is needed to research, calibrate and build quantitative trading strategies which try to capture inefficiencies in the underlying markets in the trader’s benefit. Data for sports betting markets is available today from both free and proprietary sources. Unfortunately, data comes in many different “flavours” and getting it ready for the analysis one has in mind is not always a straightforward task.

In this tutorial article, our goals are:

- To show how one can use Dremio to store and access a raw dataset of recorded soccer matches

- Get this data in Python and use appropriate data cleaning and manipulation techniques to transform the data into easily manipulated timeseries

- Perform some initial basic analysis of potential betting strategies on this dataset.

Setting up the environment

In what follows, the following will be needed.

- Python version 2.7

- Pandas (a Python package) version 0.20.1

- Numpy (a python package) version 1.11.3

- PyODBC (a Python package) version 4.0.16

- Dremio’s ODBC driver (specific to your operating system)

It will also be assumed that we have access to a Dremio instance where the appropriate data sources containing the recorded soccer matched betting data is stored.

Setting up Python and required packages

If Python 2.7 is not already installed, then please see the instructions for both Windows, Linux and OS platforms here.

Bear in mind that it is not required for this article to use the Anaconda distribution, any Python 2.7 distribution will suffice. However, Anaconda does come with most of the statistical, scientific packages already installed, so it is easier to just start the number crunching.

If you use Anaconda, Pandas should already be installed. To see which version is currently installed

import pandas as pd pd.__version__ >> u'0.20.1' import numpy as np np.__version__ >> '1.12.1'

In any case, the latest available Pandas and Numpy version for your distribution can be installed as usual by going into the command prompt/terminal and typing

> pip install pandas (or numpy)

In the highly unlikely case that you run into problems, follow the detailed instructions here: https://pandas.pydata.org/pandas-docs/stable/install.html https://scipy.org/install.html

Similarly, PyODBC, a Python package to allow us to use ODBC drivers will be required. To see if PyODBC is already installed, try importing it.

import pyodbc pyodbc.version >> '4.0.16'

If importing the package fails, it can be installed using pip

> pip install pyodbc

For more detailed instructions, please see this note from Microsoft.

Setting up Dremio Connector

Here are detailed instructions on setting up Dremio’s ODBC driver for Windows, Dremio Connector.

ODBC drivers for other platforms can be found here.

Now that everything has been set up, we can test is everything is up and running by executing the following piece of code.

import pyodbc

import pandas as pd

# Setup the ODBC connection to the Dremio instance

user_id = 'george'

user_password = 'dem0passwd'

cnxn = pyodbc.connect(r"DSN=Dremio Connector;UID={user};PWD={pwd}".format(user=user_id, pwd=user_password), autocommit=True)

# SQL style query to retrive the required data from Dremio

sql = r'SELECT "Date", "Time" FROM "@george".some_game'

# Executing the query using the alredy open ODBC connection

test_data = pd.read_sql(sql, cnxn)

# Disaplying the top 5 rows from the retrieved dataset

test_data.head()Which should return:

| Date | Time | |

|---|---|---|

| 0 | 2016/01/07 | 15:05:00:161 |

| 1 | 2016/01/07 | 15:05:00:161 |

| 2 | 2016/01/07 | 15:05:00:161 |

| 3 | 2016/01/07 | 15:05:03:228 |

| 4 | 2016/01/07 | 15:05:03:228 |

The raw data

We will assume that the raw data for this example are recorded in .csv files. Each .csv file contains the recorded data for a single football match. For each match observations of the various bets odds have been recorded at random times during the match. Although the recording frequency is not constant, one can expect to find a observations every few seconds. For each one of the bets and for a given time-snapshot multiple odds may have been recorded; these odds reflect the depth of the given bet/market, i.e. a player can bet up to a certain amount at odds 22, then an extra amount at odds 1.981.98 (worse than 22), etc. In addition to the odds, the amount that is available to bet at each odds offered is also recorded. For the purpose of this article the liquidity of the market will not be taken into consideration. In total over 15 different markets and the respective liquidity are included in the raw files, but we will concentrate on only one of them in this tutorial.

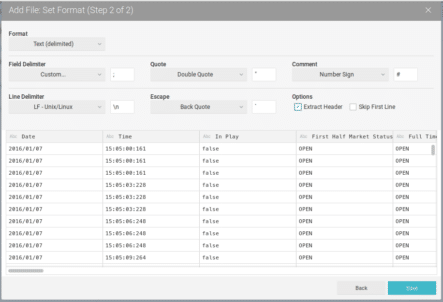

Setting up a virtual dataset

Using Dremio’s import functionality it is easy to import these files and create “physical datasets” containing all the information in each of the files. An example can be seen in the screenshot taken below.

However, the original datafiles contain over 3030 columns, which are not all needed for our analysis. In addition, some of the columns headers contain characters which may cause issue in some databases. Finally, we can directly concatenate the “Date” and “Time” columsn to create a new “Datetime” columns to use in what follows. Therefore, we will create a virtual dataset containing only the information that is really required with some manipulation of the header names and columns. To create this virtual dataset in Dremio is very easy and all that is needed is a simple SQL query.

This virtual dataset can then be saved under a Space named “Trading” for future use.

Cleaning up and formatting the raw data

The first step towards any quantitative trading strategy is to clean and process the raw, recorded data so that there is a timeseries of prices or odds with which we are comfortable to work. In this article we will be working with soccer matches betting data. Thus, our goal in this section is to create a timeseries of decimal odds for the betting market of interest, uniformly sampled at a period of one second. A soccer game lasts for ninety minutes plus any extra time (say 5 minutes in total). Adding an extra fifteen minutes or so of the half-time, our timeseries needs to have a duration of at least 90+5+15=110 minutes. We will add another 10 minutes for possible delays of the match and other unforeseen events, so our timeseries will need to have a duration of 120×60=7200 seconds. Assuming a fictional value of 2.02.0 for the bet odds throughout the game, the final output per match should look like this:

MATCH_DURATION_SECS = 120 * 60 game_example_df = pd.Series(2.0, name='Example Match', index=range(MATCH_DURATION_SECS)) game_example_df.head()

The index of the Pandas series is an integer which indicates the number of seconds since the beginning of the match, while the values correspond to the numerical value of the bet odds during that second. The name of the series will be some generic indicator of the match this timeseries corresponds to.

Decimal odds and implied probabilities

At this point, let us remind ourselves what decimal odds are. When for a given bet the decimal odds are equal to p, then it means that for every dollar one wages in favour of this bet, there is going to be a profit of (p−l) dollars if the outcome is favourable. In case of an unfavourable outcome, the loss is, of course, $l.

Decimal odds are related to the “implied probability” P of an event taking place in a very straightforward manner. Assuming the odds are fair, this means that if the player could place the exact same bet a very (very) large number of times, the player should have no profit nor loss. If W is the number of winning bets and L is the number of losing bets, then the total profit and loss (PnL) of the player will be

Of course, if the odds are fair, PnL should be $0$0, which means that

But W/(W+L) is the ratio of wins over the total number of bets, that is the probability that this bet will be profitable. A final note that we will be making use of in what follows is the representation of certain and impossible events. If we know that a bet has already won, then the probability of it winning is obviously 100. This corresponds to odds of l. Odds of l imply that there will be no profit, which is to be expected since the bet has already a known outcome. If, on the other hand, we know that the bet has already been unsuccessful, then the probability of winning is 0%. This corresponds to infinite odds, p=+∞. In a computer simulation infinity is not really an option, but a very, very big value for the odds will do the trick.

Processing the raw data

The raw data consist of rows which contain the timestamp when the observation was taken, information about the status of the match at that timestamp and, of course, the odds for various bets. For this article, we will be focusing on the bet/market where players bet on whether more or less than 2.5 goals will be scored during the match. This is the so-called “Over/Under 2.5 Goals” market. Therefore, the columns of interest to us are the following.

TAG_GOALS_SCORED = 'Goals_Scored' TAG_IN_PLAY = 'In Play' TAG_OVER_ODDS = 'Over_Odds' TAG_UNDER_ODDS = 'Under_Odds' TAG_DATETIME = 'Datetime' TAG_DATETIME_SEC = 'Datetime in seconds' # SQL style query to retrive the required data from Dremio sql = r'SELECT * FROM "Trading".some_game' # Executing the query using the alredy open ODBC connection dataset = pd.read_sql(sql, cnxn) d = dataset.loc[:, [TAG_DATETIME, TAG_IN_PLAY, TAG_OVER_ODDS, TAG_UNDER_ODDS, TAG_GOALS_SCORED]].copy() d.head()

Initially we need to transform the date and time information to number of seconds since the kick-off of the match. There are some subtleties in this procedure which need to be taken into account. As this is not the focus of this article, the details are given in the comments in the implementation given below.

# Merging the date and time columns to create a datetime object. d[TAG_DATETIME] = pd.to_datetime(d[TAG_DATETIME], format='%Y/%m/%d %H:%M:%S:%f', errors='coerce') # Sorting the data so by Datetime d = d.sort_values(TAG_DATETIME) # The In Play column is TRUE when the game is under way. We need to determine when this took place. # This can be achieved by looking at the first occurrence of the TRUE value in the In Play column. ix = d.index[(d[TAG_IN_PLAY] == 'true')][0] # Creating a time-series of starting times so that we can calulcate the difference in seconds. cc = pd.Series(d.loc[ix, TAG_DATETIME], index=d.index) cc.head() # Calculating time difference from the start of the game in seconds. Please note that if the time difference # in seconds is fractional, we are using the next whole second as a tag to avoid looking into the future. d.loc[:, TAG_DATETIME_SEC] = np.ceil((d[TAG_DATETIME].subtract(cc)).dt.total_seconds()) # Caveat: If there are two observations within the same second, then these will be mapped to the same integer second. # For example if one observation is take at 100.4 seconds after the start of the game and one is taken at 100.8, both # will be mapped to second 101. We need to keep only the last of these two, as this is the latest available information to # us before the start of second 101. # To do that we also calculate the fractional number of seconds from the beginning of the game. d.loc[:, TAG_DATETIME] = (d[TAG_DATETIME].subtract(cc)).dt.total_seconds() # The we group the observations by the integer seconds in column TAG_DATETIME_SEC. And keep only the largest of # the fractional seconds being mapped to the same integer second. times_mapping = d.loc[d[TAG_DATETIME_SEC]>=0, [TAG_DATETIME_SEC, TAG_DATETIME]].groupby(by=TAG_DATETIME_SEC).max() times_mapping = times_mapping.reset_index() # All we need to do now is a left join of the original data on the times_mapping helper table. d = pd.merge(left=times_mapping, right=d, on=[TAG_DATETIME_SEC, TAG_DATETIME], how='left') # Removing the Datetime column as it is no longer needed d = d.drop([TAG_DATETIME], axis=1) d.head(10)

The next step of the cleaning process is to make sure that the odds are consistent with the known number of goals scored so far. As there can be delays in capturing or processing the data, sometimes we seem to be able to bet on this market, although already more than two goals have been scored. For this reason, we replace all odds after a third goals is scored with appropriate values.

# Converting numeric columns to floating numbers

d[TAG_GOALS_SCORED] = pd.to_numeric(d[TAG_GOALS_SCORED], errors='coerce')

d[TAG_OVER_ODDS] = pd.to_numeric(d[TAG_OVER_ODDS], errors='coerce')

d[TAG_UNDER_ODDS] = pd.to_numeric(d[TAG_UNDER_ODDS], errors='coerce')

# Note MAX_ODDS is the really, really big value representing infinity.

MIN_ODDS = 1.0

MAX_ODDS = 1e6

# If there are any entries where the number of goals is over 2, make sure the Under odds are infinite and

# the over odds are 1.

fOver = d[TAG_GOALS_SCORED] > 2

if any(fOver):

d.loc[fOver, TAG_UNDER_ODDS] = MAX_ODDS

d.loc[fOver, TAG_OVER_ODDS] = MIN_ODDSWhen for any reason it was not possible to record the observations, a generic value of −1 was inserted into the raw data file. All of these entries are replaced with NaNs.

d.loc[d[TAG_UNDER_ODDS] == -1, TAG_UNDER_ODDS] = np.nan d.loc[d[TAG_OVER_ODDS] == -1, TAG_OVER_ODDS] = np.nan

At any given time, there might be more than one odds offered either for the “Under” or the “Over” bet. If this is the case, only th best possible odds are kept for the analysis. Since these are time-stamped with the same second, filtering the best odds is straighforward.

# Grouping by the second and keeping only the maximum odds. Note that all other fields have the same values since the # various odds were recorded at the exactly same point in time. d = d.groupby(TAG_DATETIME_SEC)[TAG_IN_PLAY, TAG_UNDER_ODDS, TAG_OVER_ODDS, TAG_GOALS_SCORED].max().reset_index() d.head()

| Datetime in seconds | In Play | Under_Odds | Over_Odds | Goals_Scored | |

|---|---|---|---|---|---|

| 0 | 0.0 | true | 1.4 | 1.04 | 0 |

| 1 | 4.0 | true | 1.4 | 1.04 | 0 |

| 2 | 7.0 | true | 1.4 | 1.04 | 0 |

| 3 | 10.0 | true | 1.4 | 1.04 | 0 |

| 4 | 13.0 | true | 1.4 | 1.04 | 0 |

Note that since the original recording of the data was done at irregular intervals, it is possible that our timeseries so far is not complete, in the sense that there are no observations for some seconds. For example, in the dataframe shown above, there are no observations for seconds 1 to 7. For this reason the dataframe will be reindexed and NaNs will be inserted for the missing observations.

# Use the Datetime in seconds column as the new index d = d.set_index(TAG_DATETIME_SEC) #Replace this by a series ranging from 0 to MATCH_DURATION_SECS - 1 d = d.reindex(np.arange(MATCH_DURATION_SECS)) d.shape

> (7200, 4)

d.head(10)

| Datetime in seconds | In Play | Under_Odds | Over_Odds | Goals_Scored |

|---|---|---|---|---|

| 0 | true | 1.4 | 1.04 | 0.0 |

| 1 | NaN | NaN | NaN | NaN |

| 2 | NaN | NaN | NaN | NaN |

| 3 | NaN | NaN | NaN | NaN |

| 4 | true | 1.4 | 1.04 | 0 |

| 5 | NaN | NaN | NaN | NaN |

| 6 | NaN | NaN | NaN | NaN |

| 7 | true | 1.4 | 1.04 | 0 |

| 8 | NaN | NaN | NaN | NaN |

| 9 | NaN | NaN | NaN | NaN |

The aforementioned procedure has been applied to a single datafile stored in Dremio, which contains the raw recorded data for a single game. The exact same procedure can be applied to all of the files so that a clean timeseries of the odds of interest is created for each game. In what follows, we will use these clean timeseries for 1000 different games to try and explore the various possibilities of developing a quantitative betting strategy for soccer matches.

Analysing the Over/Under bet odds

Based on the procedure described in the previous section, three new tables have been created and stored in Dremio.

Odds_Under is a table containing the clean timeseries of the odds in favour of less than 2.5 goals per match. Odds_Over is, similarly, a table containing the odds in favour of a bet that over 2.5 goals will be scored in a match. Final, Goals_Scored is a table containing a timeseries of the goals scored up to that second in each match. To load these datasets from Dremio is again straightforward.

# Getting the odds for the Under 2.5 goals per game

sql = r'SELECT * FROM "@george".Odds_Under'

# Executing the query using the alredy open ODBC connection

odds_under = pd.read_sql(sql, cnxn)

odds_under = odds_under.set_index('Second')

# Converting odds to numeric fields

for c in odds_under:

odds_under[c] = pd.to_numeric(odds_under[c], errors='coerce')

odds_under.iloc[0:5, 0:5]| Second | Game_0 | Game_1 | Game_2 | Game_3 | Game_4 |

|---|---|---|---|---|---|

| 0 | 1.47 | 1.81 | 1.44 | 1.53 | 1.63 |

| 1 | 1.47 | NaN | 1.44 | 1.53 | NaN |

| 2 | NaN | NaN | 1.44 | 1.53 | NaN |

| 3 | NaN | NaN | 1.44 | 1.53 | NaN |

| 4 | NaN | NaN | NaN | 1.53 | 1.63 |

# Getting the odds for the Over 2.5 goals per game

sql = r'SELECT * FROM "@george".Odds_Over'

# Executing the query using the alredy open ODBC connection

odds_over = pd.read_sql(sql, cnxn)

odds_over = odds_over.set_index('Second')

# Getting the goals scored up to each point in time in each game

sql = r'SELECT * FROM "@george".Goals_Scored'

# Executing the queries using the alredy open ODBC connection

goals_scored = pd.read_sql(sql, cnxn)

goals_scored = goals_scored.set_index('Second')

# Converting odds to numeric

for c in odds_over:

odds_over[c] = pd.to_numeric(odds_over[c], errors='coerce')

for c in goals_scored:

goals_scored[c] = pd.to_numeric(goals_scored[c], errors='coerce')The missing values for different periods in each game are prohibitive for our analysis. For this reason, the missing values will be filled with the latest recorded value. Using a previous recorded value ensures we are not using potential information from the future. However, it is very important to remember that there have been seconds in each game for which no actual information was available. When backtesting a potential trading strategy, extreme care should be taken not to assume that one could have traded during those seconds.

# Filling forward. After filling forward, we are filling backwards to eliminate the few missing observations at the start of the match df_under = odds_under.fillna(method='ffill').fillna(method='bfill') df_over = odds_over.fillna(method='ffill').fillna(method='bfill') df_goals = goals_scored.fillna(method='ffill').fillna(method='bfill') # At this point there should be no missings in our data assert pd.isnull(df_under).any().any()==False, 'There are still missing in the under odds...' assert pd.isnull(df_over).any().any()==False, 'There are still missing in the over odds...' assert pd.isnull(df_goals).any().any()==False, 'There are still missing in the goals scored...'

Average over/under probabilities

Remember that the implied probability of a bet being successful is equal to the inverse of the odds offered. Therefore the implied probabilities of the under/over bets can be calculated using the odds and then these can be averaged across time.

Why are implied probabilities useful? Odds are real numbers between 1 and +∞. Therefore it is not easy to see how these can be averaged to see if there is any large scale inefficiency in our data. Implied probabilities, however are real numbers between 0 and 1 inclusive. Therefore, these can be easily averaged to give a meaningful number.

implied_under_probs = 1 / df_under

implied_over_probs = 1 / df_over

import matplotlib.pyplot as plt

%matplotlib inline

fig = plt.figure(figsize=(9,6))

ax = fig.add_subplot(1,1,1)

ax.plot(implied_under_probs.index, implied_under_probs.mean(axis=1), label='Under')

ax.plot(implied_over_probs.index, implied_over_probs.mean(axis=1), label='Over')

ax.set_xlabel('Seconds')

ax.set_ylabel('Implied probability')

ax.legend(loc='best')

ax.grid()

How can we interpret this figure? To answer this question, first we need to go through some basic bet trading concepts. Our goal is simple: make profit. How do we accomplish this? Traditionally, one way to make a profit through betting was to be right. We place a (say) $1 bet at time t and odds p(t) and, if we are right, we make a profit of p(t)−l dollars. However, nowdays, many sports betting platforms allow for “caching out early”. What this really is, is accepting a bet from someone for the same outcome. Let us give an example.

Assume at time t1 the score on a given match is 0−0 and we place a $1 bet on the Over 2.5 goals per game at odds p(t1)=3. At some future point in time t2 > t1, the score is 1−0 and the odds for the Over 2.5 goals bet are now p(t2)=1.5. Assume that we accept a $2 bet from someone waging that Over 2.5 goals will be scored. Let us consider all possible outcomes.

If more than 2.5 goals are scored, our original bet is successful and thus we make a profit of $3−$1=$2. The second bet we accepted was also successful, so we have to pay

$2×(p(t2 -1)=$1. Thus, our total profit is $2−$1=$1.

What happens if less than two goals are scored? Well our original bet was a losing one and we lose $1. From the second bet, however, we make $2. So our total profit is again −$1+$2=$1. This example can easily be generalised to show that if the odds change, then our profit (or loss) per dollar of the original bet is equal to

where p1 = p(t1) is the odds at which the bet was originally placed and p2 = p(t2) is the odds when we decided to “close” the bet. Note, that if p2 > p1, we are actually making a loss. In the extreme case where the bet is lost, p2=+∞ so that

that is we lose the entire amount of the original bet.

To recap, if we decide to close the bet when p1 > p2 then we make a profit. If we close the ber when p1 < p2, we make a loss. However, remember that the implied probability P to be successful is the inverse of the odds. Therefore

which means that the trade (opening and then closing a bet) is profitable if and only if the implied probability is higher when the bet is closed. Note that a higher probability means that it is now more probable that the bet will be successful.

So, in light of all of this, how can we interpret the figure. Remember that what has been plotted is the average implied probability for both the Over and Under 2.5 goals bets across roughly 1000 soccer matches. We are looking to see, of on average, there is some period in the match where any of these probabilities increase. This would indicate that perhaps there is some inefficiency in pricing the actual probability of the event which we could take advantage of to make a profit. How would we do that?

Let us focus on the implied probability for the Over bet which seems to be increasing from about 50% to almost 70% towards the end of the match. This behaviour seems to be suggesting a potential betting strategy. During the 85th minute of the game that is second 6000=(45+15+40)×60, bet on the Over 2.5 goals bet. On average, the implied probability will increase, which means that, on average, the odds will decrease over the next few minutes of the game. Then close the bet to make a profit, always on average.

Is this real? Or is it an artifact of our data/cleaning procedure? This is not an easy question to answer. Our data gathering process is certainly not perfect. We know that there gaps in our data and we have filled them using the latest available data point. Maybe we could not have been able to trade during the 85-th minute on every single game. Or maybe the commission charged by the betting companies mitigate any edge we have found. To answer these questions access so more detailed data is required, which is unfortunately not freely available.

Summary

The goal of this article has been to show how we can leverage Dremio to facilitate the analysis of sports betting data to design a quantitative trading strategy.

Using Dremio has facilitated this analysis in two key ways. First, it is very convenient to import the raw .csv datafiles. By importing the datafiles into Dremio, we were able to convert them effortlessly into virtual datasets and then access and modify them using simple SQL commands.

Secondly, the “clean” odds datasets may contain thousands of timeseries at a granularity of several observations per second. The more the games recorded and the higher the frequency, the more accurate our simulation results will be. Dremio makes querying such big datasets much more efficient.

Sign up for AI Ready Data content