18 minute read · September 3, 2017

Handling Data Variety in the Data Lake

Intro

Telling a story with data usually involves integrating data from multiple sources. In this tutorial we will show how Dremio can be used to join data from JSON in Amazon S3 with other sources in the data lake to help derive further insights into the incident data from the city of San Francisco. The idea is that you can use Dremio to join data from other sources that have not yet been moved to the data lake, and query it all from your favorite tools. In this tutorial we will build on the work we have already done with Tableau in previous tutorials.

Prerequisites

To follow this tutorial you should have access to a Dremio deployment, and you should have completed the first three tutorials - Getting Oriented to Dremio, Working With Your First Dataset, and Visualizing Your First Dataset With Tableau. You also need access to Tableau. If you don’t have Tableau a free trial is available at www.tableau.com.

The Data

Having zip codes for the San Francisco Police Incidents data would open up a number of interesting reference datasets, such as demographic data. Unfortunately, while the incidents include address and coordinate data, they don’t include zip code. In this tutorial we are going to join the incidents to a lookup table that includes zip code info for each of the coordinates. Once we have zip codes, we will also join demographic data available from the US Census. First let’s remind ourselves of the schema for the incidents data:

| Field | Type | Example |

| IncidentNum | String | 170512983 |

| Category | String | VEHICLE THEFT |

| Descript | String | STOLEN AUTOMOBILE |

| DayofWeek | String | Saturday |

| Date | String | 06/24/2017 |

| Time | String | 00:30 |

| PdDistrict | String | SOUTHERN |

| Resolution | String | NONE |

| Address | String | 9TH ST / MISSION ST |

| X | String | -122.414714295579 |

| Y | String | 37.7762310404758 |

| Location | String | (37.7762310404758°, -122.414714295579°) |

| PdId | Integer | 17051298307021 |

To generate zip codes, we’ll need to use a reverse geocode service. There are a number of options available, and for this tutorial we used the Google Maps API. You can pass in coordinates, and the service will return the full address, including the zip code.

Here’s one way to do it, using Pandas. This code gets the distinct latitude+longitude pairs from Dremio via ODBC, then calls the Google Maps API, then saves the results to a csv file:

import googlemaps

from pygeolib import GeocoderError, GeocoderResult

import pyodbc

import pandas as pd

import numpy as np

import sys

import pyarrow as pa

import pyarrow.parquet as pq

gmaps = googlemaps.Client(key='your-key')

host = 'localhost'

port = 31010

uid = 'my-u'

pwd = 'my-pwd'

cnxn = pyodbc.connect("DRIVER=DREMIO ODBC DRIVER;ConnectionType=Direct;HOST={};PORT={};AuthenticationType=Plain;UID={};PWD={}".format(host,port,uid,pwd),autocommit=True)

sql = '''SELECT DISTINCT Lon,Lat FROM SFIncidents.incidents'''

df = pd.read_sql(sql,cnxn)

for index, row in df.iterrows():

try:

full_result = gmaps.reverse_geocode((row['Lat'],row['Lon']),result_type="postal_code")

zip_result = GeocoderResult(full_result).postal_code

print zip_result

df.set_value(index, 'zip', zip_result)

except:

e = sys.exc_info()

print e

df.to_csv('/zip_lookup.csv',index_label="id")The results are provided in the S3 bucket as zip_lookup.csv.

This is a simple table with four columns:

| Field | Type | Example |

| id | String | 0 |

| Lon | String | -122.40340479 |

| Lat | String | 37.7754207 |

| Zip | String | 94103 |

Let’s start by identifying this file to Dremio. Go into the Samples data source to locate zip_lookup.csv. Hover over this file to see the new dataset icon:

After you click the new dataset button, you’ll be asked to configure this CSV file:

Make sure you specify the correct line delimiter, which is OS dependent and should correspond to the environment in which the file was created, which is Unix/Linux in this case. Also, check the “Extract Field Names” box to assign column names from the first row in this file. Then click “Save.”

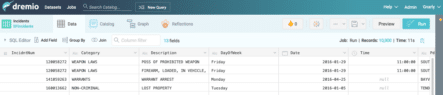

Now you will see this physical dataset in Dremio’s dataset viewer:

We know the data types for some of these fields aren’t strings, so let’s change them to the appropriate types. Lon and Lat should be floats. For each column, click the data type menu with the letters “Abc” on the left of the column header, then select the appropriate type:

For both columns, use the same name for the new column, and check the box to delete the original column, effectively replacing the old columns with the new ones. Select the option to “Replace values with null” instead of “Delete records.”

When making these changes we are not altering the source data. Instead, we are defining a virtual dataset that will apply these changes to source data dynamically.

Now, we aren’t going to need the id column, so let’s remove it from our virtual dataset. Click the arrow on the right of the column header to access the data transformation menu and select “Drop”:

There are lots of options on this menu, and they are data type dependent. Your virtual dataset should no longer have the id column, and you should have three columns total. You might be tempted to change zip to an integer, which would be ok for locations in San Francisco, but there are plenty of zip codes in New England that begin with 0, so the real type for zip codes should always be string:

You’re now ready to save this virtual dataset. Click “Save As” at the top, and call this virtual dataset “zip_lookup” in your “SFIncidents” space:

Building The Joins

Now we’re ready to join zip_lookup to our incidents virtual dataset. Let’s start by going back to our SFIncidents space. Now we should see two datasets:Next, open the incidents dataset, which will take you to the dataset viewer:Just above the table of data there’s a “Join” button with two intersecting circles. Click on this button to build a join to the zip_lookup table:A preview of our incident data is provided on the bottom left. Above, we have a list of all our data sources, including our home space, public spaces, and sources. Open the SFincidents space and select zip_lookup. Data from this source will now be previewed in the bottom right: Click “Next” to create the join between these sources. Drag the Lon and Lat from both datasets into the middle area to create the join, then click “Preview” to test your join. What you see might not be what you expected: What do all the question marks mean? If you scroll to the right in the join preview, you’ll see the Lat and Lon columns from both tables side by side.

Now we’re ready to join zip_lookup to our incidents virtual dataset. Let’s start by going back to our SFIncidents space. Now we should see two datasets:

Next, open the incidents dataset, which will take you to the dataset viewer:

Just above the table of data there’s a “Join” button with two intersecting circles. Click on this button to build a join to the zip_lookup table:

A preview of our incident data is provided on the bottom left. Above, we have a list of all our data sources, including our home space, public spaces, and sources. Open the SFincidents space and select zip_lookup. Data from this source will now be previewed in the bottom right:

Click “Next” to create the join between these sources. Drag the Lon and Lat from both datasets into the middle area to create the join, then click “Preview” to test your join. What you see might not be what you expected:

What do all the question marks mean? If you scroll to the right in the join preview, you’ll see the Lat and Lon columns from both tables side by side.

Refining Float Precision

The question marks for the first two Lon and Lat columns from incidents mean that there’s no match for the Lon and Lat values from zip_lookup. Why not? It turns out the precision of the floats is different. The data from the city of San Francisco has floats with precision out to 16 decimal places for some locations, whereas the values in our lookup table have only 8 decimal places.

Fortunately, there’s an easy way to adjust the Lon and Lat values in our virtual dataset. Let’s cancel out of the join creation steps, which will take us back to the dataset viewer. Then click on the data transformation menu for the Lon column and select “Calculated Field”:

Now you’ll see the column name on the upper right, and a list of functions on the right. Type TRUNCATE(“Lon”,8) into the box on the left:

Name the new field Lon and check the box to “Drop the Source Field.” Click Apply which will return you to your virtual dataset. Perform the same steps for the Lat column. Your virtual dataset now has Lon and Lat values with precision out to 8 decimal places.

Before you worry that the location data is no longer valid, it’s worth considering what this precision corresponds to in terms of coordinate data. 6 decimal places is enough to identify individual humans, and 7 decimal places is the limit of commercial surveying equipment. See the Wikipedia page on Decimal Degrees to learn more. The additional precision included in these coordinates is superfluous and can be ignored.

Using A Left Outer Join

There are several ways we could combine the data from the zip lookup table with the source data. In the video of this tutorial we show one way - editing the definition of the virtual dataset to perform an inner join on the zip_lookup virtual dataset. Another option is to create a new virtual dataset that is the result of the join with the lookup table. Both approaches are valid. The main difference is that when creating virtual datasets for each step, we are creating incremental transformations that can be used in other contexts. We’ll proceed along these lines for the rest of the tutorial.

Now let’s try building the join again. Click the join button and select the zip_lookup table, then drag Lat and Lon columns from both datasets together. Let’s also use a left outer join this time, instead of the default inner join. To make this change, select the Type dropdown and then choose Left Outer:

Now click Apply and you should see your incidents data with a new column for zip listed as the rightmost column. You can now click “Save As…” and create a new virtual dataset called “incidents_zip”:

Adding Demographic Data

Now it should be easy for us to add demographic data to these incidents. Let’s go back to Samples and navigate to the zips.json file:

Click on the new dataset button and confirm the format for this JSON file. You should now see the physical dataset in Dremio’s dataset viewer:

Now we have lookups for State, City, and Population for every zip code in the US. Of course the San Francisco data already has state and city for every incident, but there are about 50 zip codes in the city. You can also purchase more detailed demographic data that includes gender, ethnicity, income, etc.

In this dataset the zip column is called _id. Let’s rename that to zip by clicking on the name _id in the column header, typing zip, then clicking enter. We could delete the city, state, and loc columns, but those might be useful for other jobs we work on that don’t have city and state already populated, so let’s leave them for now. Save the virtual dataset as zip_demographics:

Adding A Second Join

Now we can go back to our incidents_zip virtual dataset and join it to zip_demographics:

And on the join screen, select zip from both datasets:

Click “Apply” and what you see might surprise you:

The reason it says “No Results in Preview Sample” is because this view is based on a preview of the join. The fact that there is no data isn’t totally surprising - of the 50,000+ zip codes in the US only 50 match our records from San Francisco. Clearly the sample from the zips.json file didn’t include any zip codes from San Francisco. Click Run:

Now you should see the results:

We can tidy up our virtual dataset a bit by removing some of the columns we won’t need: zip0, Lon0, Lat0 (these are all columns from joined tables that match the columns we already have), city, state, loc.

Note that each time you drop one of these columns you’ll see no results. This is because Dremio runs a preview first and a user must explicitly click “Run.” This is helpful with very large datasets where running the full query could take several minutes or longer.

In this case we could have started by joining zip_demographics with zip_lookup to create a new virtual dataset, then joined the new virtual dataset to incidents. The end result would be the same.

Now that we have all our data together, let’s click Save As and call this new virtual dataset incidents_demo. After you click Save, you’ll return to the dataset viewer and can click the Tableau button to launch Tableau connected to this dataset:

Example Visualizations

What follows are some interesting visualizations you can build with this data. First, we can compare patterns for stolen property incidents between days of the week:

Here we are visualizing incidents by zip code across all incident types.

Here’s a breakdown of type by zip code.

Assuming the number of incidents is more or less proportional to population, it might be interesting to compare “density” of events. Here we can see the 94103, 94014, 94015 are all very high in terms of incidents per population. In contrast, 94129, 94130, 94131 all look like there are far fewer incidents per population and might be the better places to live, all other factors being equal:

You’ll see these are not limited to the category VEHICLE THEFT.

Conclusion

In this tutorial we combined data from the city of San Francisco with demographic data to help us make better sense of patterns of incidents by zip code. While we visualized the results with Tableau, the work we did to join these different data sources can be used by any SQL-based tool.Being able to deal with the exciting variety of data in the data lake is essential when performing analysis. Here we worked with a few file formats of very modest size, but the power of Dremio is that these techniques could be applied to other sources.It’s also worth noting that we did this work with virtually no coding and without knowing SQL in depth.