10 minute read · August 15, 2024

Why Modernize Your Hadoop Data Lake with Dremio and MinIO?

· Principal Product Marketing Manager

Hadoop, once celebrated as a groundbreaking framework for processing massive datasets, has become increasingly burdensome for most enterprises. Despite its initial advantages, Hadoop is now widely seen as slow, costly, and time-consuming to manage. Although it was once cheap to provision, the tight coupling of compute and storage, combined with high maintenance costs and operational inefficiencies, has made it harder for organizations to give end users efficient access to data. As newer data processing frameworks emerge, Hadoop's limitations have become more apparent, revealing the need for modernization to fully harness the potential of big data. Modernizing Hadoop not only improves performance, reduces latency, and optimizes resource utilization but also strengthens security, data governance, and compliance. By moving to modern data processing frameworks and storage technologies, organizations can achieve greater agility, scalability, and efficiency and foster a self-service analytics culture.

The Process of Modernizing Hadoop with Dremio and MinIO

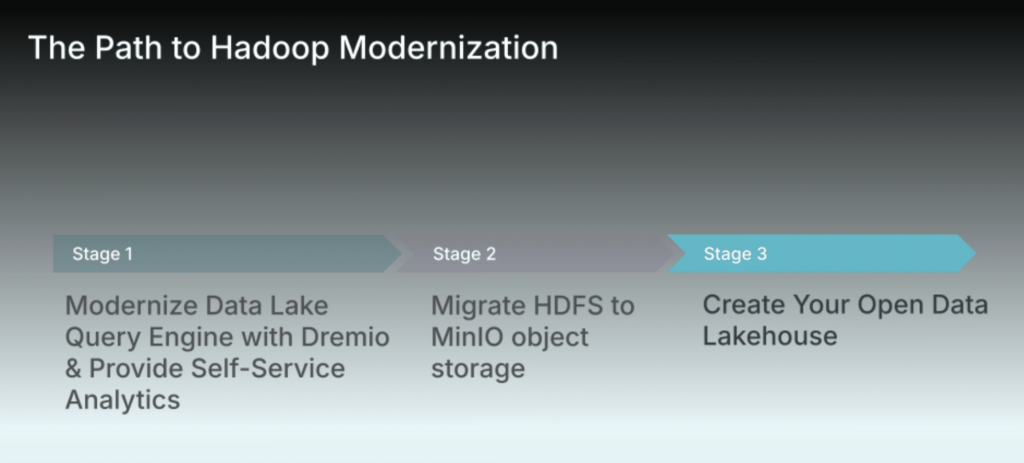

Dremio and MinIO recommend a multi-phase approach to Hadoop modernization. A phased strategy lets organizations manage risk and maintain control throughout the process. By splitting the modernization into smaller, more manageable phases, you can evaluate the outcome of each stage, make any needed adjustments, and improve the system incrementally. This approach helps ensure that your modernized infrastructure meets organizational goals with minimal disruption.

Phase 1: Modernize the Query Engine with Dremio and Provide Self-Service

The query engines included with Hadoop distributions, such as Hive, Drill, or Impala, often fall short of meeting the needs of business users and analysts. By replacing these legacy engines with Dremio, organizations can achieve significant performance gains, improving the speed and efficiency of their analytical workloads on existing Hadoop environments. Dremio not only enhances query performance—offering more than 10x improvements over Hive and Impala—but also improves manageability, governance, and ease of use, streamlining the analytical process.

Additionally, Dremio can connect to multiple data sources and federate queries across them, enabling self-service analytics. This democratizes data access across domains and makes Dremio the unified access layer for broader data-driven decision-making. Unified access is especially valuable in a multi-phase migration because Dremio can act as a bridge between the legacy Hadoop environment and the new lakehouse environment. Because Dremio connects to both environments and provides a robust semantic layer, companies can give users a seamless experience for their analytical workloads while the transition is underway.

Phase 2: Migrate Data from HDFS to MinIO Object Storage

To simplify and modernize a data lake, it is critical to move from an environment like Hadoop, where compute and storage are tightly coupled, to one that separates them. In this phase, existing data is moved into a modern, S3-compatible MinIO object storage environment. Hadoop's distcp (distributed copy) utility is one of the simplest ways to copy data from HDFS to MinIO. By moving to MinIO, companies both eliminate the high licensing costs associated with most Hadoop environments and decouple compute from storage. This lets organizations scale storage independently of compute, lowering overall infrastructure costs.
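As a rough sketch of the copy step, the Python helper below assembles a `hadoop distcp` command line that points Hadoop's S3A connector at a MinIO endpoint. The `fs.s3a.*` properties and the `-update` flag are standard Hadoop options; the function name, NameNode address, bucket, endpoint, and credentials are hypothetical placeholders, and the sketch assumes the cluster has the `hadoop-aws` (S3A) module on its classpath.

```python
import shlex
from typing import List


def build_distcp_command(hdfs_src: str, s3_dest: str, endpoint: str,
                         access_key: str, secret_key: str,
                         path_style: bool = True) -> List[str]:
    """Return the argv for a `hadoop distcp` copy from HDFS to an S3A target."""
    if not hdfs_src.startswith("hdfs://"):
        raise ValueError("source must be an hdfs:// URI")
    if not s3_dest.startswith("s3a://"):
        raise ValueError("destination must be an s3a:// URI")
    # Standard Hadoop S3A connector properties, passed as generic -D options
    s3a_props = {
        "fs.s3a.endpoint": endpoint,
        "fs.s3a.access.key": access_key,
        "fs.s3a.secret.key": secret_key,
        # Path-style requests (http://host/bucket/key) are common with MinIO
        "fs.s3a.path.style.access": "true" if path_style else "false",
    }
    cmd = ["hadoop", "distcp"]
    # Generic -D options must precede distcp's own options
    cmd += [f"-D{key}={value}" for key, value in s3a_props.items()]
    # -update skips files that already match at the destination, so the
    # same command can be rerun for an incremental catch-up copy
    cmd += ["-update", hdfs_src, s3_dest]
    return cmd


if __name__ == "__main__":
    # Hypothetical NameNode, MinIO endpoint, bucket, and credentials
    argv = build_distcp_command(
        "hdfs://namenode:8020/warehouse/sales",
        "s3a://lakehouse/warehouse/sales",
        endpoint="http://minio.example.internal:9000",
        access_key="EXAMPLE_ACCESS_KEY",
        secret_key="EXAMPLE_SECRET_KEY",
    )
    # Print a shell-ready command line to run on a node with Hadoop installed
    print(shlex.join(argv))
```

Passing secrets with `-D` exposes them in process listings and shell history, so in practice they are better kept in `core-site.xml` or a Hadoop credential provider, leaving only the source and destination URIs on the command line.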

Phase 3: Create a Modern, Open Data Lakehouse

In the final phase of modernization with Dremio and MinIO, the goal is to create a modern, Iceberg-based lakehouse platform by migrating Hive tables to the open table format Apache Iceberg. Data can be brought into Iceberg either in place, by creating Iceberg metadata that points to the existing data files, or by restating it, that is, rewriting the data into new Iceberg tables. Dremio provides numerous guides on designing your approach to migrating from the Hive table format to Apache Iceberg. Once on Iceberg, companies can take advantage of its robust data management and optimization features. A modern Apache Iceberg lakehouse offers numerous benefits, such as better performance through data pruning, support for ACID transactions, and the ability to handle schema and partition evolution. It also enables time travel queries and is compatible with a variety of compute engines, making it an ideal open table format for managing large-scale, complex datasets in a Dremio and MinIO lakehouse.
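To make the in-place versus restate distinction concrete, here is a small Python sketch that emits the Spark SQL for each path. It is not Dremio's migration tooling: it assumes Spark with the Iceberg runtime, `spark_catalog` configured as Iceberg's `SparkSessionCatalog` over the existing Hive metastore, and a second Iceberg catalog (hypothetically named `lakehouse`) whose warehouse lives on MinIO. The `snapshot` and `migrate` procedures and `CREATE TABLE ... USING iceberg AS SELECT` are Iceberg features in Spark SQL; the function, table names, and `_iceberg` suffix are illustrative.

```python
import re
from dataclasses import dataclass

# Simple identifier check so names can be embedded in SQL without escaping
_IDENT = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


@dataclass(frozen=True)
class HiveTable:
    database: str
    name: str

    def qualified(self) -> str:
        for part in (self.database, self.name):
            if not _IDENT.fullmatch(part):
                raise ValueError(f"unsupported identifier: {part!r}")
        return f"{self.database}.{self.name}"


def migration_sql(table: HiveTable, strategy: str,
                  target_catalog: str = "lakehouse") -> str:
    """Return a Spark SQL statement that moves a Hive table into Iceberg.

    Assumes spark_catalog is an Iceberg SparkSessionCatalog wrapping the
    Hive metastore and target_catalog is an Iceberg catalog on MinIO.
    """
    src = table.qualified()
    if strategy == "snapshot":
        # In place, non-destructive trial: a new Iceberg table that reuses the
        # Hive table's data files while leaving the Hive table untouched
        return f"CALL spark_catalog.system.snapshot('{src}', '{src}_iceberg')"
    if strategy == "migrate":
        # In place: replace the Hive table with an Iceberg table built over
        # its existing data files (the data files are not rewritten)
        return f"CALL spark_catalog.system.migrate('{src}')"
    if strategy == "restate":
        # Restate: rewrite every row into a new Iceberg table via CTAS
        return (f"CREATE TABLE {target_catalog}.{src} USING iceberg "
                f"AS SELECT * FROM spark_catalog.{src}")
    raise ValueError(f"unknown strategy: {strategy!r}")


if __name__ == "__main__":
    orders = HiveTable("sales", "orders")  # hypothetical Hive table
    for strategy in ("snapshot", "migrate", "restate"):
        print(f"{strategy}: {migration_sql(orders, strategy)}")
```

Snapshotting first is a low-risk way to validate queries against Iceberg before running `migrate`, which replaces the Hive table. Restating costs a full rewrite of the data but lets you change file layout or partitioning along the way.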

The Benefits of Hadoop Modernization with Dremio and MinIO

Modernizing Hadoop offers numerous benefits that can help organizations unlock the full potential of their data infrastructure. Companies such as Nomura have modernized their Hadoop environments by moving to a Dremio/MinIO lakehouse. Key benefits organizations have experienced include:

- Enhanced Performance: Modernizing Hadoop with Dremio and MinIO improves data processing speeds and reduces latency, enabling faster and more efficient analysis. Moving from a legacy query engine to Dremio, which is built on Apache Arrow and takes advantage of Apache Iceberg, lets companies use modern capabilities that accelerate analytical workloads. With its query acceleration technology, called Reflections, Dremio delivers sub-second BI performance. Dremio also uses its Columnar Cloud Cache (C3) to selectively cache data, cutting I/O costs by over 90%.

- Self-Service Analytics: Because of Hadoop's performance challenges and complexity, IT departments often restrict direct data access and offer analysts only curated datasets through a separate data warehouse or data mart. This limited access hampers business agility and makes direct self-service analytics difficult. A Dremio and MinIO lakehouse removes this obstacle: high-performance analytics run directly on the data in the data lake, giving users rapid data access and analysis. At the same time, the Dremio/MinIO environment gives data users a robust semantic layer and a modern interface for self-service data curation and access. GenAI features such as Text-to-SQL, automatic data tagging, and wiki generation make it easier and more intuitive for users to find, understand, and interact with the data.

- Simplified Data Management: Dremio’s robust data management capabilities and MinIO’s scalable storage simplify the management of large data volumes. Many tasks that are manual in a traditional Hadoop data lake are automated in a modern Dremio/MinIO lakehouse. Automatic optimization features include compaction, which rewrites small files into larger ones to keep queries performant, and garbage collection, which removes unused manifest files, manifest lists, and data files. Other tasks, such as data ingestion, are also automated in a Dremio environment, and the MinIO Console provides a simple, intuitive interface to even the most advanced features of the storage suite. Together, these capabilities keep data up to date and readily available for analysis, reducing complexity and improving overall efficiency.

- Scalability: Dremio and MinIO infrastructures are designed to scale seamlessly with growing data volumes while maintaining consistent performance. In a modern Dremio/MinIO lakehouse, companies can scale compute and storage independently, adjusting each as needed. This allows organizations to handle growing data demands without compromising speed or efficiency.

- Cost Savings: With a modern Dremio/MinIO lakehouse, there is no need to duplicate data or create data extracts, reducing overall storage cost and footprint. At the same time, the large performance improvements mean companies can do more with less, resulting in a substantially lower TCO than legacy Hadoop environments. Decoupling compute and storage allows organizations to scale each resource only as needed, and low-cost MinIO object storage further reduces storage expenses. By reducing the need for expensive hardware upgrades and minimizing ongoing maintenance costs, the Dremio-MinIO solution delivers significant cost savings.

- Business Agility and Time to Market: Migrating from Hadoop to a modern Dremio/MinIO lakehouse significantly improves a company's business agility and shortens its time to market. With Dremio's advanced query engine and MinIO's scalable storage, companies can streamline data access and processing, enabling faster decision-making and reducing the time needed to analyze and act on data insights. This efficiency allows companies to respond more quickly to market changes and customer demands. As a result, organizations experience faster innovation cycles and quicker product launches, giving them a competitive edge in the marketplace.
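The compaction described under Simplified Data Management is essentially a bin-packing problem: gather the files below some size threshold and group them so that each rewrite produces files close to a target size. The Python sketch below plans such groups with first-fit decreasing. It illustrates the idea only and is not Dremio's or Iceberg's implementation; the function name, the 512 MB target, the 75% threshold, and the file paths are assumed values.

```python
from typing import Dict, List

MB = 1024 * 1024


def plan_compaction(file_sizes: Dict[str, int],
                    target_size: int = 512 * MB,
                    min_size_ratio: float = 0.75) -> List[List[str]]:
    """Group small data files into rewrite tasks of at most target_size bytes."""
    threshold = int(target_size * min_size_ratio)
    # Only files below the threshold are worth rewriting
    small = [(path, size) for path, size in file_sizes.items() if size < threshold]
    # First-fit decreasing: place the largest files first
    small.sort(key=lambda item: item[1], reverse=True)
    groups: List[List[str]] = []
    totals: List[int] = []
    for path, size in small:
        for i, total in enumerate(totals):
            if total + size <= target_size:
                groups[i].append(path)
                totals[i] += size
                break
        else:
            # No existing group has room, so start a new one
            groups.append([path])
            totals.append(size)
    # A group holding a single file would just rewrite it unchanged, so skip it
    return [group for group in groups if len(group) > 1]


if __name__ == "__main__":
    # Hypothetical data files and sizes for one table
    files = {
        "part-0001.parquet": 300 * MB,
        "part-0002.parquet": 200 * MB,
        "part-0003.parquet": 100 * MB,
        "part-0004.parquet": 50 * MB,
        "part-0005.parquet": 600 * MB,  # already large enough; left alone
    }
    for group in plan_compaction(files):
        print(group)
```

In a Dremio lakehouse you would not plan this by hand; compaction is run by the engine, for example through Dremio's `OPTIMIZE TABLE` command.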

Conclusion

Modernizing a Hadoop data lake with Dremio and MinIO brings substantial advantages to organizations seeking to enhance their data infrastructure. This transformation not only resolves the performance, scalability, and cost challenges associated with traditional Hadoop environments but also empowers businesses to achieve greater agility and efficiency. By leveraging Dremio's advanced analytics capabilities and MinIO's scalable storage, companies can modernize their data lakes to meet the demands of today's fast-paced, data-driven world. The result is a robust, flexible, and cost-effective data environment that accelerates time to market and drives business innovation.

Get hands-on with Dremio, or contact us today to set up a meeting about modernizing your Hadoop data lake. Also, check out our Hadoop Migration Guide.