As the name suggests, a data lakehouse architecture combines a data lake and a data warehouse. It is more than a simple integration of the two: the idea is to bring out the best of both architectures, namely the reliable transactions of a data warehouse and the scalability and low cost of a data lake.

Over the last decade, businesses have been heavily investing in their data strategy to be able to deduce relevant insights and use them for critical decision-making. This has helped them reduce operational costs, predict future sales, and take strategic actions.

A lakehouse is a new type of data platform architecture that:

- Provides the data management capabilities of a data warehouse and takes advantage of the scalability and agility of data lakes

- Helps reduce data duplication by serving as the single platform for all types of workloads (e.g., BI, ML)

- Is cost-efficient

- Prevents vendor lock-in and lock-out by leveraging open standards

History and Evolution of the Data Lakehouse

"Data lakehouse" is a relatively new term in big data architecture, and the concept has evolved rapidly in recent years. It combines the best of both worlds: the scalability and flexibility of data lakes, and the reliability and performance of data warehouses.

Data lakes, which were first introduced in the early 2010s, provide a centralized repository for storing large amounts of raw, unstructured data. Data warehouses, on the other hand, have been around for much longer and are designed to store structured data for quick and efficient querying and analysis. However, data warehouses can be expensive and complex to set up, and they often require extensive data transformation and cleaning before data can be loaded and analyzed. Data lakehouses were created to address these challenges and provide a more cost-effective and scalable solution for big data management.

With the increasing amount of data generated by businesses and the need for fast and efficient data processing, the demand for a data lakehouse has grown considerably. As a result, many companies have adopted this new approach, which has evolved into a central repository for all types of data in an organization.

What Does a Data Lakehouse Do?

Data lakehouses address four key problems in data architecture:

- Data silos: a lakehouse provides a centralized repository for storing and managing large amounts of structured and unstructured data.

- Data movement: it eliminates the need for complex and time-consuming data transfers, reducing the latency associated with shifting data between systems.

- Slow processing: it enables organizations to process data quickly and efficiently, so they can analyze the data and act on it sooner.

- Scalability: it provides a scalable and flexible solution for storing large amounts of data, so organizations can keep managing and accessing their data easily as their needs grow.

Data warehouses, by comparison, are designed to help organizations manage and analyze large volumes of structured data.

How Does a Data Lakehouse Work?

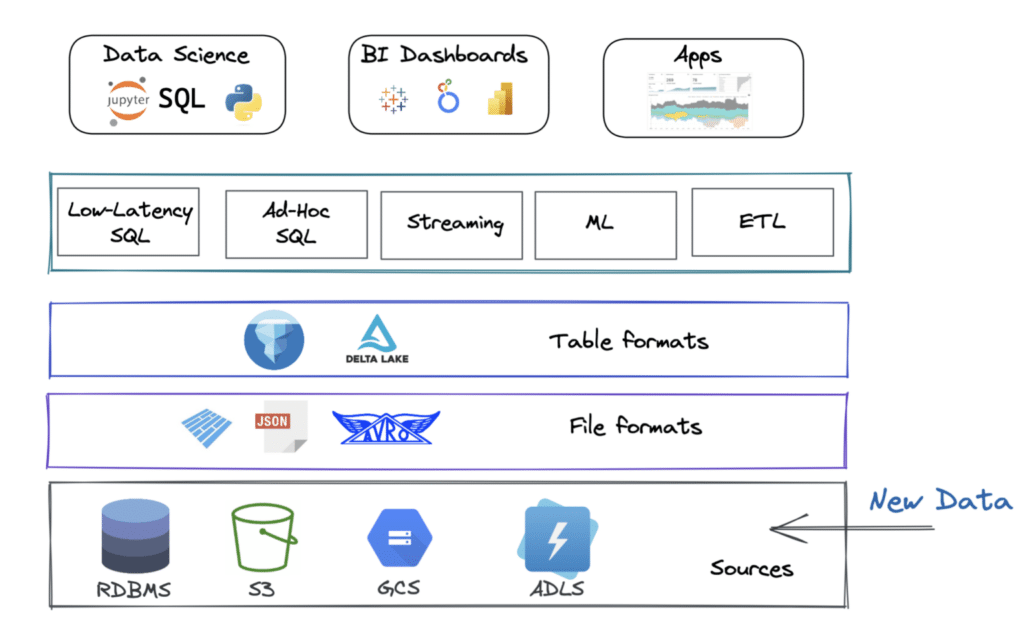

A data lakehouse uses a multi-layer architecture that integrates the benefits of data lakes and data warehouses. It starts by ingesting large amounts of raw data, in both structured and unstructured formats, into the data lake component. This raw data is stored in its original format, allowing organizations to retain all of the information without any loss of detail.

From there, advanced data processing and transformation can occur using tools such as Apache Spark and Apache Hive. The processed data is then organized and optimized for efficient querying in the data warehouse component, where it can be easily analyzed using SQL-based tools.

The result is a centralized repository for big data management that supports fast and flexible data exploration, analysis, and reporting. The data lakehouse's scalable infrastructure and ability to handle diverse data types make it a valuable asset for organizations seeking to unlock the full potential of their big data.
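The flow described above (ingest raw data, transform it, then query it with SQL) can be illustrated with a small sketch. This is only a toy model under stated assumptions: the `RAW_EVENTS` records and all function names are hypothetical, and Python's built-in `sqlite3` module stands in for the SQL-queryable layer; a real lakehouse would typically use an engine such as Apache Spark over files in object storage.

```python
import json
import sqlite3

# Hypothetical raw events, kept exactly as they arrived (the "lake" side).
RAW_EVENTS = [
    '{"order_id": 1, "customer": "acme", "amount": "120.50"}',
    '{"order_id": 2, "customer": "globex", "amount": "80.00"}',
    '{"order_id": 3, "customer": "acme", "amount": "19.50"}',
]


def transform(raw_line: str) -> tuple:
    """Parse one raw JSON line and cast its fields to typed columns."""
    record = json.loads(raw_line)
    return int(record["order_id"]), record["customer"], float(record["amount"])


def build_curated_table(raw_lines: list) -> sqlite3.Connection:
    """Load transformed rows into an in-memory SQL table (the "warehouse" side)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (order_id INTEGER, customer TEXT, amount REAL)"
    )
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [transform(line) for line in raw_lines],
    )
    conn.commit()
    return conn


def revenue_by_customer(conn: sqlite3.Connection) -> dict:
    """Run a plain SQL aggregation over the curated table."""
    rows = conn.execute(
        "SELECT customer, SUM(amount) FROM orders "
        "GROUP BY customer ORDER BY customer"
    )
    return dict(rows.fetchall())


if __name__ == "__main__":
    print(revenue_by_customer(build_curated_table(RAW_EVENTS)))
```

The raw strings are never modified, so the original detail is retained, while the typed table is what SQL-based tools would query.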

Elements of a Data Lakehouse

Data lakehouses have a range of elements to support organizations’ data management and analysis needs.

- A key element is the ability to store and process a variety of data types including structured, semi-structured, and unstructured data.

- They provide a centralized repository for storing data, allowing organizations to store all of their data in one place, making it easier to manage and analyze.

- The data management layer enables data to be governed, secured, and transformed as needed.

- The data processing layer provides analytics and machine learning capabilities, allowing organizations to quickly and effectively analyze their data and make data-driven decisions.

- Another important element of a data lakehouse is the ability to provide real-time processing and analysis, which enables organizations to respond quickly to changing business conditions.
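As a rough illustration of what a data management layer might do before data reaches analysts, the sketch below validates incoming records against a schema and masks a sensitive field. Everything here is an assumption made for the example: the `EXPECTED_SCHEMA` fields, the masking rule, and the function names are hypothetical and do not come from any specific lakehouse product.

```python
# Hypothetical schema for incoming user records: field name -> required type.
EXPECTED_SCHEMA = {"user_id": int, "email": str, "country": str}


def validate(record: dict) -> None:
    """Governance step: reject records that do not match the expected schema."""
    missing = EXPECTED_SCHEMA.keys() - record.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    for field, expected_type in EXPECTED_SCHEMA.items():
        if not isinstance(record[field], expected_type):
            raise TypeError(f"{field} must be {expected_type.__name__}")


def mask_email(email: str) -> str:
    """Security step: keep the first character and the domain, hide the rest."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def govern(record: dict) -> dict:
    """Validate a record, then return a copy with the email masked."""
    validate(record)
    return {**record, "email": mask_email(record["email"])}


if __name__ == "__main__":
    print(govern({"user_id": 7, "email": "ana@example.com", "country": "PT"}))
```

Returning a copy rather than mutating the input keeps the raw record intact, which matches the idea of retaining data in its original form while serving a governed view.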

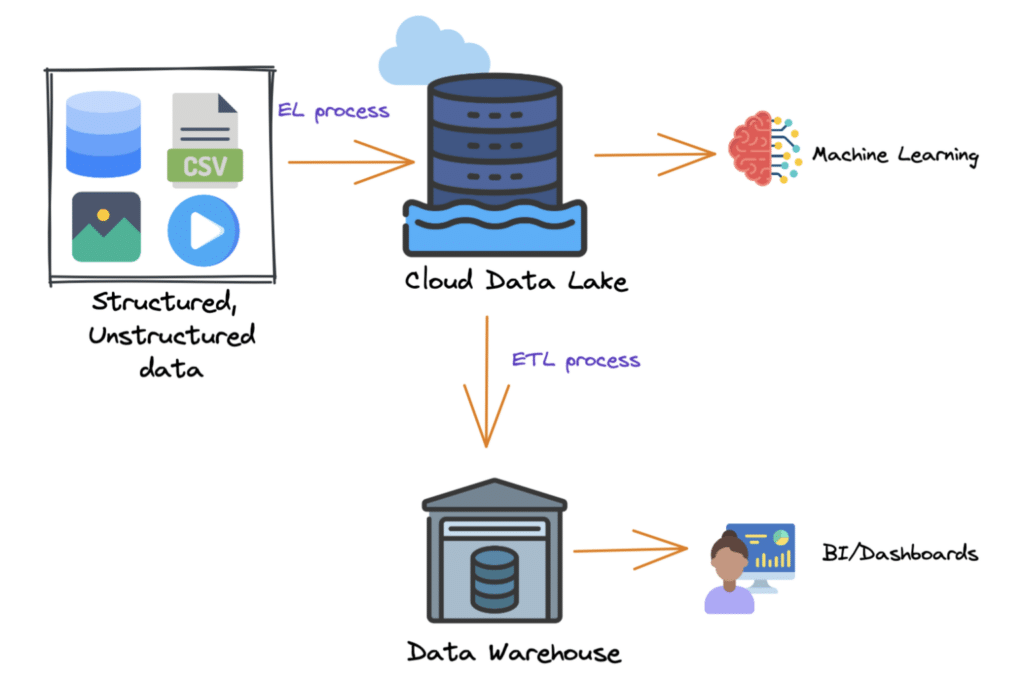

Cloud Data Lake

Data lakehouses are often spoken of in tandem with cloud data lakes and cloud data warehouses. With the increasing adoption of cloud-based solutions, many organizations have turned to cloud data lakes to build their data platforms.

Cloud data lakes provide organizations with the flexibility to scale storage and compute components independently, thereby optimizing their resources and improving their overall cost efficiency. By separating storage and computing, organizations can store any amount of data in open file formats like Apache Parquet and then use a computing engine to process the data. Additionally, the elastic nature of cloud data lakes enables workloads – like machine learning – to run directly on the data without needing to move data out of the data lake.
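To make the separation of storage and compute more concrete, here is a minimal sketch in which data is written once to a shared location and two independent "engines" read it in place, without making their own copies. The assumptions are significant: a local temporary directory stands in for cloud object storage, CSV stands in for an open columnar format such as Apache Parquet (which would need a third-party library), and all function names are hypothetical.

```python
import csv
import tempfile
from pathlib import Path


def write_to_storage(storage_dir: Path, rows: list) -> Path:
    """Storage layer: persist rows once, in an open, engine-neutral format."""
    path = storage_dir / "sales.csv"
    with path.open("w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=["region", "units"])
        writer.writeheader()
        writer.writerows(rows)
    return path


def bi_engine_total_units(path: Path) -> int:
    """Compute engine 1: a BI-style aggregate read directly from storage."""
    with path.open(newline="") as f:
        return sum(int(row["units"]) for row in csv.DictReader(f))


def ml_engine_feature_vectors(path: Path) -> list:
    """Compute engine 2: an ML-style feature extraction over the same file."""
    with path.open(newline="") as f:
        return [[float(row["units"])] for row in csv.DictReader(f)]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data_path = write_to_storage(
            Path(tmp),
            [{"region": "eu", "units": 3}, {"region": "us", "units": 5}],
        )
        print(bi_engine_total_units(data_path))
        print(ml_engine_feature_vectors(data_path))
```

Because neither engine owns the file, either one could be scaled up, replaced, or shut down without touching the stored data.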

Despite the many benefits of cloud data lakes, there are also some potential drawbacks:

- One challenge is ensuring the quality and governance of data in the lake, particularly as the volume and diversity of data stored in the lake increases.

- Another challenge is the need to move data from the data lake to downstream applications – such as business intelligence tools – which often require additional data copies and can lead to job failures and other downstream issues.

- Additionally, because data is stored in raw formats and written by many different tools and jobs, files may not always be optimized for query engines and low-latency analytical applications.
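The last point, files that are not optimized for query engines, often shows up as many small files written by different jobs. One common mitigation is compaction: merging small files into fewer, larger ones. The sketch below is a simplified illustration only; it assumes JSON Lines files in a local directory, and the function name and file layout are hypothetical rather than taken from any particular tool.

```python
import json
import tempfile
from pathlib import Path


def compact_small_files(source_dir: Path, target_file: Path) -> int:
    """Merge every *.jsonl file in source_dir into one file.

    target_file is assumed to live outside source_dir so it is not
    picked up as an input. Returns the number of rows written.
    """
    rows_written = 0
    with target_file.open("w") as out:
        # Sort for a deterministic merge order.
        for small_file in sorted(source_dir.glob("*.jsonl")):
            with small_file.open() as f:
                for line in f:
                    line = line.strip()
                    if not line:
                        continue  # skip blank lines
                    json.loads(line)  # fail fast on a corrupt record
                    out.write(line + "\n")
                    rows_written += 1
    return rows_written


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        raw_dir = Path(tmp) / "raw"
        raw_dir.mkdir()
        for i in range(3):
            (raw_dir / f"part-{i}.jsonl").write_text(json.dumps({"id": i}) + "\n")
        print(compact_small_files(raw_dir, Path(tmp) / "compacted.jsonl"))
```

A query engine then opens one file instead of many, which reduces per-file overhead; production systems usually also rewrite the data into a columnar format during this step.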

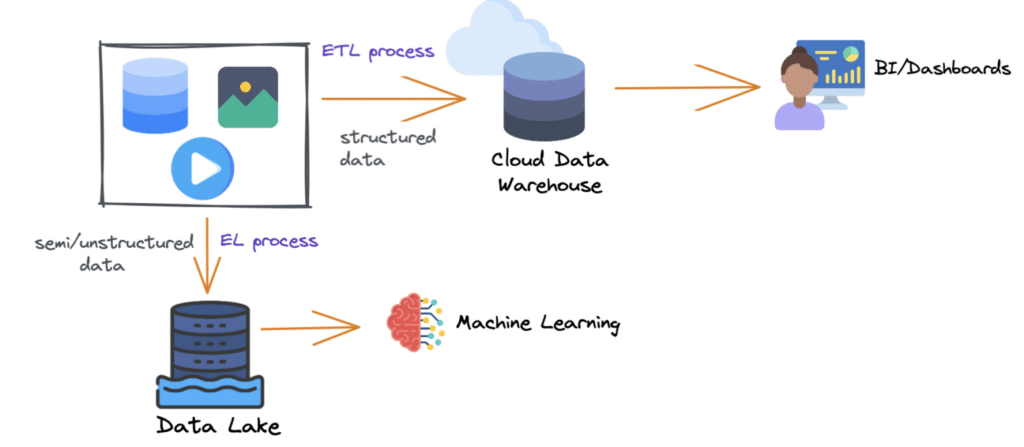

Cloud Data Warehouse

The first generation of on-premises data warehouses provided businesses with the ability to derive historical insights from multiple data sources. However, this solution required significant investment in both cost and infrastructure management. In response to these challenges, the next generation of data warehouses moved to the cloud.

One of the primary advantages of cloud data warehouses is the ability to separate storage and computing, allowing each component to scale independently. This feature helps to optimize resources and reduce costs associated with on-premises physical servers.

However, there are also some potential drawbacks to using cloud data warehouses:

- While they do reduce some costs, they can still be relatively expensive.

- Additionally, running any workload where performance matters often requires copying data into the data warehouse before processing, which can lead to additional costs and complexity.

- Moreover, data in cloud data warehouses is often stored in a vendor-specific format, leading to lock-in/lock-out issues, although some cloud data warehouses do offer the option to store data in external storage.

- Finally, some cloud data warehouses still lack support for multiple analytical workloads, particularly those that involve unstructured data, such as machine learning.

Future of the Data Lakehouse

Having covered what data lakehouses are, their elements, and what they do, it's only natural to look at where the technology is heading. The future looks promising: more and more organizations are embracing big data, and the need for flexible, scalable, and cost-effective ways to manage it continues to grow.

In the coming years, expect to see increased adoption of data lakehouses, with organizations of all sizes and across all industries recognizing their value in providing a unified platform for managing and analyzing big data.

Additionally, expect to see continued innovation and advancements in data lakehouse technology, such as improved data processing and transformation capabilities, enhanced security and governance features, and expanded integration with other data management tools and technologies.

The rise of machine learning and artificial intelligence will drive the need for flexible and scalable big data platforms that can support the development and deployment of these advanced analytics models.

The future of data lakehouses will also be influenced by the increasing importance of data privacy and security, and we can expect to see data lakehouses evolving to meet these new requirements, including better data masking and data encryption capabilities. Overall, the future of data lakehouses looks bright, and they are likely to play an increasingly critical role in helping organizations extract value from their big data.
