8 minute read · June 11, 2024

The Value of Dremio’s Semantic Layer and The Apache Iceberg Lakehouse to the Snowflake User

Senior Tech Evangelist, Dremio

When it comes to processing data for analytics and AI, Snowflake is one of the most popular platforms. It improves its users' lives with a user-friendly platform that allows:

- Purchasing and selling datasets through the Snowflake Marketplace.

- Designing AI apps in Python with Snowpark.

Snowflake's popularity is well earned. However, the Apache Iceberg data lakehouse opens up even more possibilities, and incorporating other platforms like Dremio can give your Snowflake arsenal additional superpowers.

What is the Data Lakehouse?

Data lakehouse architecture is an approach where you use your existing data lake storage as the source of truth for your data. This is made possible by table formats like Apache Iceberg, which let your data be treated as database tables with ACID transactions by any tool that supports the format. With an open table format, you can also pair an open catalog, such as Nessie (which also powers Dremio's Enterprise Catalog) or Polaris (which will also power Snowflake's native Iceberg catalog), to track your tables, views, governance rules, and other objects, making them portable across the tools that work with your tables. Both Snowflake and Dremio support Apache Iceberg lakehouses, making them prime tools for implementing this architecture.
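To make the catalog's role more concrete: in Iceberg, a catalog keeps a pointer from each table name to the table's current metadata file, and a commit succeeds only by atomically swapping that pointer. The Python sketch below is a hypothetical, in-memory toy. The `ToyCatalog` class, the `sales.orders` table, and the metadata file names are invented for illustration. It is not Nessie's or Polaris's API, only a model of the compare-and-swap idea that lets concurrent writers commit safely.

```python
import threading
from typing import Dict, Optional


class CommitConflict(Exception):
    """Raised when another writer committed first, so this writer's base version is stale."""


class ToyCatalog:
    """Hypothetical in-memory catalog mapping table names to their current metadata file.

    Real catalogs such as Nessie or Polaris persist this pointer durably; this sketch
    only illustrates the compare-and-swap idea behind an atomic commit.
    """

    def __init__(self) -> None:
        self._pointers: Dict[str, str] = {}
        self._lock = threading.Lock()

    def current(self, table: str) -> Optional[str]:
        return self._pointers.get(table)

    def commit(self, table: str, expected: Optional[str], new: str) -> None:
        # Swap the pointer only if it still points where this writer started.
        with self._lock:
            actual = self._pointers.get(table)
            if actual != expected:
                raise CommitConflict(f"{table}: expected {expected!r}, found {actual!r}")
            self._pointers[table] = new


# Usage: two writers start from the same version; only the first commit wins.
catalog = ToyCatalog()
catalog.commit("sales.orders", None, "metadata/v1.metadata.json")

base = catalog.current("sales.orders")  # both writers read v1
catalog.commit("sales.orders", base, "metadata/v2.metadata.json")  # writer A succeeds
try:
    catalog.commit("sales.orders", base, "metadata/v2b.metadata.json")  # writer B is stale
except CommitConflict as err:
    print("writer B must re-read and retry:", err)

print(catalog.current("sales.orders"))  # metadata/v2.metadata.json
```

A stale writer never overwrites a newer commit; it has to re-read the latest metadata and retry, which is the optimistic-concurrency pattern Iceberg commits rely on.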

What is Dremio?

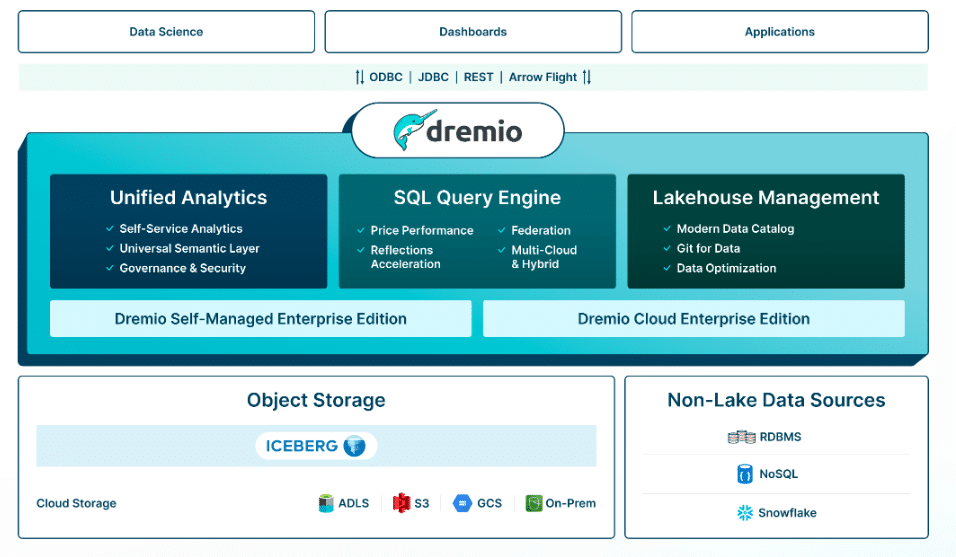

Dremio is a lakehouse platform built specifically to implement a lakehouse architecture on your data lake. It provides value in three main areas:

- Unified Intelligent Semantic Layer: Connect databases, data lakes, and data warehouses to Dremio to create a single access point for your data across both cloud and on-premises sources. This is particularly useful for Snowflake customers looking to connect on-premises sources to their cloud data lake.

- SQL Query Engine: Dremio can federate queries across all these sources and execute them with industry-leading performance by leveraging Apache Arrow and its unique Reflections feature.

- Lakehouse Management: Dremio Cloud includes features that simplify managing a data lakehouse, whether through fine-grained governance or table maintenance operations, making it easy and turnkey to manage Apache Iceberg tables on your data lake.
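Federation, as described in the SQL Query Engine item, means one query can combine data that lives in different systems. The sketch below is a toy analogy under stated assumptions: two separate SQLite in-memory databases stand in for an on-premises database and a cloud warehouse, and a plain Python function plays the engine by pushing an aggregation down to one source, fetching only the needed columns from the other, and joining the results. Dremio's real engine plans and executes this with Apache Arrow and does far more; the table and function names here are hypothetical.

```python
import sqlite3
from typing import Dict

# Hypothetical source 1: an on-premises relational database (simulated with SQLite).
onprem = sqlite3.connect(":memory:")
onprem.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT)")
onprem.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "EMEA"), (2, "AMER")])

# Hypothetical source 2: a cloud warehouse table (simulated with a separate SQLite database).
cloud = sqlite3.connect(":memory:")
cloud.execute("CREATE TABLE orders (customer_id INTEGER, amount REAL)")
cloud.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 100.0), (1, 50.0), (2, 75.0)])


def federated_revenue_by_region() -> Dict[str, float]:
    """Toy federation: push work down to each source, then join in the 'engine'."""
    # Push the aggregation down to the source that owns the orders.
    totals = dict(cloud.execute(
        "SELECT customer_id, SUM(amount) FROM orders GROUP BY customer_id"))
    # Fetch only the columns needed from the other source.
    regions = onprem.execute("SELECT id, region FROM customers ORDER BY id").fetchall()
    # Join the two partial results in Python.
    result: Dict[str, float] = {}
    for cid, region in regions:
        result[region] = result.get(region, 0.0) + totals.get(cid, 0.0)
    return result


print(federated_revenue_by_region())  # {'EMEA': 150.0, 'AMER': 75.0}
```

Pushing the aggregation to the source that owns the data reduces how many rows the engine has to move before joining, which is one reason federated engines try to delegate work to each source where they can.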

Dremio makes Apache Iceberg data lakes easy, fast, and open. When paired with Snowflake, it creates a complete data analytics and AI platform. One feature that has long set Dremio apart is its built-in semantic layer, which makes data more accessible and powers Dremio's Reflections feature. The semantic layer can be crucial for Snowflake users who need to unify disparate data with their Snowflake warehouse.

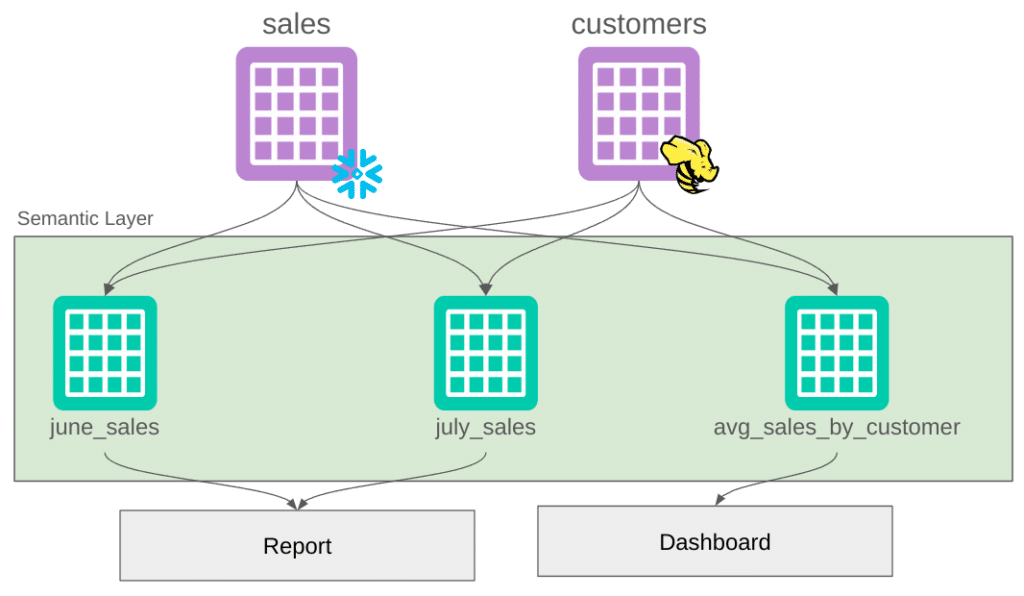

What is a Semantic Layer and What Makes Dremio’s Semantic Layer Special?

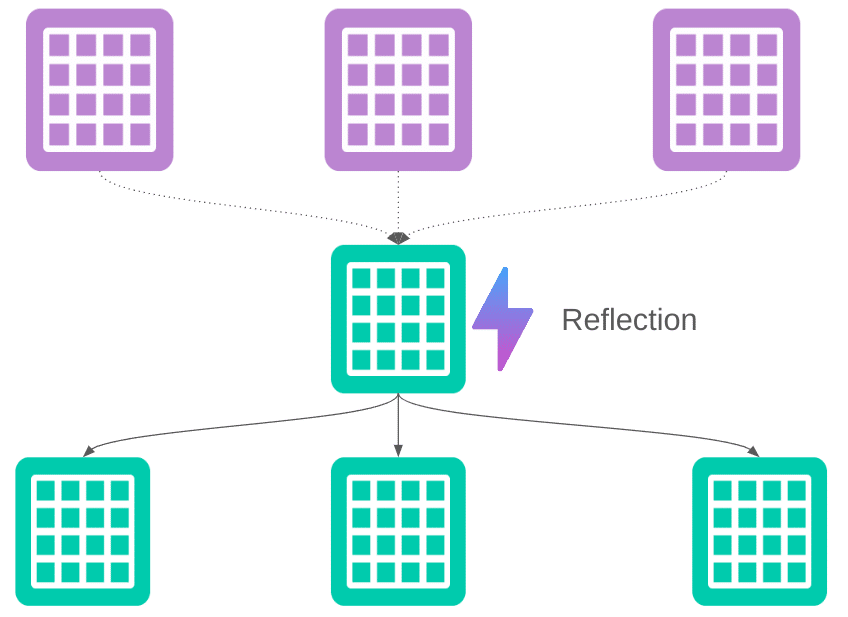

A semantic layer tracks your business metrics and views: the objects that encode your business logic in terms users understand and that they need to run their analytics and models. In Dremio, these objects can combine data from several sources, so you can define a metric once and access it from your Python notebooks, BI tools, and data applications. Because the semantic layer is curated within Dremio, its Reflections feature can deliver query optimizations that decoupled tooling could not achieve. Reflections replace materialized views and cubes with an Apache Iceberg-based relational cache, which accelerates queries both by caching optimized versions of the data and by using Iceberg metadata to query that cache even more efficiently.
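As a minimal sketch of the define-once idea, assuming SQLite as a stand-in for Dremio and a single hypothetical `orders` table (in Dremio the view could span several sources), the business rule for "revenue" lives in one view. Two hypothetical consumers, a notebook function and a dashboard function, both query that view instead of re-implementing the rule.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (region TEXT, amount REAL, status TEXT);
    INSERT INTO orders VALUES
        ('EMEA', 100.0, 'complete'),
        ('EMEA', 40.0, 'refunded'),
        ('AMER', 75.0, 'complete');

    -- The business definition of "revenue" lives in exactly one place.
    CREATE VIEW revenue_by_region AS
        SELECT region, SUM(amount) AS revenue
        FROM orders
        WHERE status = 'complete'
        GROUP BY region;
""")


def notebook_query():
    """Hypothetical stand-in for a Python notebook consumer."""
    return dict(conn.execute(
        "SELECT region, revenue FROM revenue_by_region ORDER BY region"))


def dashboard_query(region: str) -> float:
    """Hypothetical stand-in for a BI dashboard consumer; it reuses the same definition."""
    row = conn.execute(
        "SELECT revenue FROM revenue_by_region WHERE region = ?", (region,)).fetchone()
    return row[0] if row else 0.0


print(notebook_query())         # {'AMER': 75.0, 'EMEA': 100.0}
print(dashboard_query("EMEA"))  # 100.0
```

If the definition of revenue changes, for example to exclude another order status, only the view needs updating, and every consumer picks up the change.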

For example, suppose you create a reflection on View A (a view that is the result of joining several tables), and Views B, C, and D are derived from it. Dremio can intelligently use the reflection on View A for queries on A, B, C, and D because it is aware of the relationships between the views. Dremio's semantic layer not only lets you define and govern your business logic across all your data in one place, but also gives Dremio the contextual understanding of your data it needs for cutting-edge acceleration across data in many places.
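The lineage reasoning in the View A example can be modeled in a few lines. This is a deliberately simplified, hypothetical model: the `PARENT` and `REFLECTED` names and the `view_x` entry are invented, and Dremio's real reflection matching happens inside its query optimizer and is more general than a walk up a name-based lineage graph. It only shows why knowing that Views B, C, and D derive from View A lets a single reflection on A serve all four.

```python
from typing import Dict, Optional

# Hypothetical view lineage: each view maps to the view it is derived from
# (None means it is built directly on base tables).
PARENT: Dict[str, Optional[str]] = {
    "view_a": None,      # joins several base tables
    "view_b": "view_a",
    "view_c": "view_a",
    "view_d": "view_a",
    "view_x": None,      # unrelated view with no reflection anywhere in its lineage
}

# Views that currently have a reflection.
REFLECTED = {"view_a"}


def reflection_for(view: str) -> Optional[str]:
    """Return the view itself or its nearest ancestor that has a reflection, else None."""
    current: Optional[str] = view
    while current is not None:
        if current in REFLECTED:
            return current
        current = PARENT.get(current)
    return None


for v in ["view_a", "view_b", "view_c", "view_d", "view_x"]:
    print(v, "->", reflection_for(v))
# view_a -> view_a
# view_b -> view_a
# view_c -> view_a
# view_d -> view_a
# view_x -> None
```

The loop handles lineage chains of any depth, so a view derived from View B would also resolve to the reflection on View A; a view with no reflected ancestor falls back to querying its sources directly.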

Conclusion

In conclusion, while Snowflake continues to be a centerpiece of data processing for analytics and AI, integrating Apache Iceberg and Dremio can significantly enhance its capabilities. The Data Lakehouse architecture, powered by Apache Iceberg, offers a robust foundation for treating data on the lake as database tables with ACID transactions, ensuring consistency and reliability. Dremio complements this architecture by providing unified analytics, an efficient SQL query engine, and comprehensive lakehouse management. Its semantic layer, in particular, stands out by making data more accessible and optimizing queries through reflections. For Snowflake users, incorporating Dremio and Apache Iceberg means unifying disparate data sources and achieving unprecedented data acceleration and efficiency. These tools create a powerful, integrated platform for advanced data analytics and AI, making them an invaluable addition to any data strategy.

Get Started with Dremio Today!

Here Are Some Exercises to See Dremio’s Features at Work on Your Laptop

- Intro to Dremio, Nessie, and Apache Iceberg on Your Laptop

- From SQLServer -> Apache Iceberg -> BI Dashboard

- From MongoDB -> Apache Iceberg -> BI Dashboard

- From Postgres -> Apache Iceberg -> BI Dashboard

- From MySQL -> Apache Iceberg -> BI Dashboard

- From Elasticsearch -> Apache Iceberg -> BI Dashboard

- From Kafka -> Apache Iceberg -> Dremio