12 minute read · June 12, 2024

3 Reasons to Create Hybrid Apache Iceberg Data Lakehouses

Data lakehouse architecture is becoming a necessity for optimizing the cost, performance, and agility of your data platform. There are pros and cons to a cloud-based data lakehouse built on vendors like AWS, Azure, Google, and Snowflake. Similarly, there are pros and cons to an on-premises (on-prem) data lakehouse built on storage vendors like Vast, NetApp, and MinIO. More and more organizations are taking the path of a Hybrid Data Lakehouse, where some of their data is in the cloud and the rest is on-prem. These hybrid setups can be unified for various use cases with a data lakehouse platform like Dremio, which can connect to both cloud and on-prem sources. The data is often stored in the Apache Iceberg format for additional portability and performance. In this article, let's break down the benefits of cloud and on-prem data lakehouses and why Hybrid may be the right choice for you.

Benefit #1: Cost Optimization

One of the primary reasons to consider a hybrid data lakehouse is the potential for cost optimization. Cloud storage solutions often have scalable pricing models that can grow with your data needs. However, storing massive amounts of data in the cloud can become expensive over time due to ongoing storage and data egress costs. On-prem storage, by contrast, allows for more predictable and potentially lower long-term costs, especially if you already have existing infrastructure.

A hybrid approach lets you strategically place your data where it is most cost-effective. Frequently accessed data or data requiring heavy computational resources can be kept in the cloud, benefiting from the cloud's elasticity and scalability. Meanwhile, archival or less frequently accessed data can reside on-prem, reducing ongoing costs. By leveraging both environments, you can achieve a balance that optimizes your overall data storage and processing expenses.
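To make the trade-off concrete, here is a minimal Python sketch that compares the monthly bill of an all-cloud placement with a hybrid one. Everything in it is an assumption for illustration: the prices are made up, the `Dataset` class and `plan_cost` function are hypothetical names, and the model covers only storage and egress, not compute.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Dataset:
    name: str
    size_tb: float              # data at rest, in terabytes
    egress_tb_per_month: float  # data read out of the cloud each month


# Hypothetical prices, for illustration only; substitute your own quotes.
CLOUD_STORAGE_USD_PER_TB_MONTH = 20.0
CLOUD_EGRESS_USD_PER_TB = 100.0
ONPREM_USD_PER_TB_MONTH = 10.0  # amortized hardware, power, and operations


def monthly_cost(ds: Dataset, location: str) -> float:
    """Storage-plus-egress cost of one dataset in one location."""
    if location == "cloud":
        return (ds.size_tb * CLOUD_STORAGE_USD_PER_TB_MONTH
                + ds.egress_tb_per_month * CLOUD_EGRESS_USD_PER_TB)
    if location == "on-prem":
        # Assumption: reading data on your own network carries no egress fee.
        return ds.size_tb * ONPREM_USD_PER_TB_MONTH
    raise ValueError(f"unknown location: {location!r}")


def plan_cost(datasets: List[Dataset], placement: Dict[str, str]) -> float:
    """Total monthly cost of a placement plan (dataset name -> location)."""
    return sum(monthly_cost(ds, placement[ds.name]) for ds in datasets)


datasets = [
    Dataset("hot_sales", size_tb=10.0, egress_tb_per_month=1.0),
    Dataset("archive", size_tb=100.0, egress_tb_per_month=0.0),
]
all_cloud = plan_cost(datasets, {"hot_sales": "cloud", "archive": "cloud"})
hybrid = plan_cost(datasets, {"hot_sales": "cloud", "archive": "on-prem"})
print(f"all-cloud: ${all_cloud:,.0f}/month, hybrid: ${hybrid:,.0f}/month")
```

With these made-up prices, the all-cloud plan comes to $2,300 a month and the hybrid plan to $1,300, because the large archive is cheaper to keep on-prem. Real numbers depend on your contracts, your existing hardware, and the compute costs this sketch leaves out.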

Benefit #2: Performance and Latency

Performance and latency are critical factors in data processing and analytics. Cloud data lakehouses offer robust performance with the ability to scale resources up or down based on demand. However, this can sometimes come with latency issues, especially if the data and compute resources are not in the same region or if your organization has stringent requirements for data access speeds.

On-prem data lakehouses can offer lower latency since the data and compute resources are physically closer, leading to faster data retrieval times. This is particularly beneficial for workloads requiring real-time processing, and keeping data on-prem also helps where data sovereignty is a concern. On-prem hardware can also be tuned more granularly for specific workloads, which can yield further performance gains.

You can benefit from the best of both worlds in a hybrid setup. Critical applications and data that require low latency can be handled on-prem, while the cloud can be used for more scalable, compute-intensive tasks. By strategically placing your workloads, you can enhance overall performance and meet specific latency requirements without compromising on scalability.

Benefit #3: Agility and Flexibility

Agility and flexibility are essential in today's fast-paced business environment. Cloud platforms provide unmatched agility, allowing you to quickly spin up new services, scale resources, and deploy updates with minimal downtime. This flexibility is invaluable for development, testing, and deployment cycles, enabling faster time-to-market for new applications and features.

While potentially slower to adapt, on-prem solutions offer greater control over your environment, which can be crucial for certain regulatory and compliance requirements. They also allow for custom configurations that might not be possible in a cloud environment.

A hybrid data lakehouse leverages the agility of the cloud while maintaining the control and customization capabilities of on-prem solutions. This approach provides the flexibility to respond to changing business needs dynamically, ensuring that your data infrastructure can support both current and future demands. By integrating platforms like Dremio with Apache Iceberg, you can unify your data across cloud and on-prem environments, allowing seamless data management and analytics with enhanced portability and performance.

The Components of a Hybrid Data Lakehouse

A Hybrid Data Lakehouse leverages cloud and on-premises resources to provide a flexible, cost-effective, high-performance data architecture. The critical components of a hybrid data lakehouse include the Cloud Data Lake, Data Lakehouse Platform, and On-Prem Data Lake. Let’s explore each of these components and their unique contributions.

Cloud Data Lake

Cloud providers such as AWS, Google Cloud, and Azure offer robust data storage solutions that form the backbone of the cloud portion of a hybrid data lakehouse.

These cloud platforms offer the flexibility to scale resources up or down based on demand, ensuring that your data lake can handle varying workloads efficiently. They provide the elasticity needed for dynamic data processing tasks, development, and testing environments.

Data Lakehouse Platform

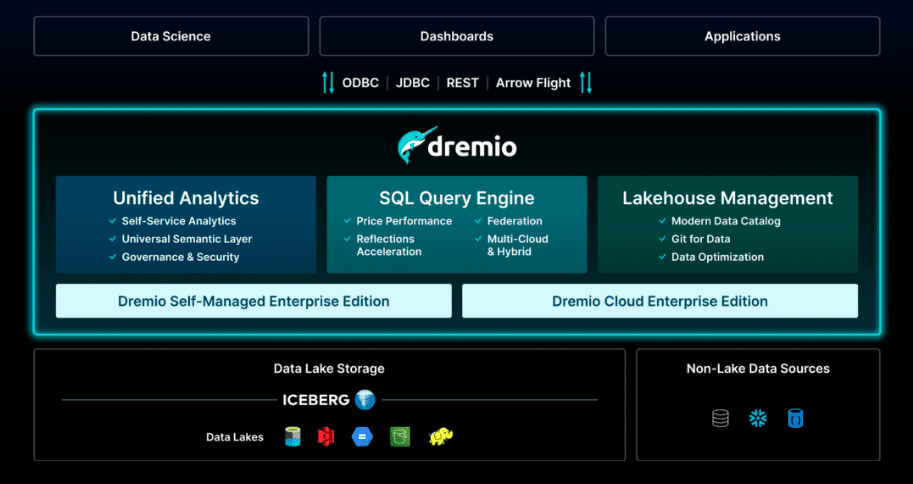

Dremio serves as a powerful data lakehouse platform that connects cloud and on-prem data sources, enabling seamless data management and analytics.

Dremio leverages the Apache Iceberg table format to ensure data portability and optimized performance. Dremio offers features like query acceleration, data reflections, and a self-service semantic layer that allows users to perform fast, interactive analytics on large datasets without complex ETL processes. By integrating with Apache Iceberg, Dremio ensures that data is efficiently managed and queried, regardless of its location.

Dremio's ability to connect to cloud and on-prem data lakes, along with its robust query optimization capabilities, makes it a crucial component of a hybrid data lakehouse architecture. It provides a unified view of data, allowing users to access and analyze their data seamlessly across different environments.
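As a rough illustration of what a unified view can look like, the Python sketch below builds a standard SQL query that reads one logical table from two physical locations. The source names `cloud_lake` and `onprem_lake`, the table names, and the columns are all hypothetical, and how you submit the query (web UI, JDBC/ODBC, or Arrow Flight) is left out.

```python
def unified_orders_query(cloud_table: str, onprem_table: str) -> str:
    """Return SQL that combines recent cloud rows with archived on-prem rows.

    The table names are trusted configuration values here; do not pass
    untrusted user input into this function.
    """
    columns = "order_id, order_date, amount"  # hypothetical columns
    return (
        f"SELECT {columns}, 'cloud' AS origin FROM {cloud_table}\n"
        "UNION ALL\n"
        f"SELECT {columns}, 'on-prem' AS origin FROM {onprem_table}"
    )


# Hypothetical source and table names for a cloud and an on-prem data lake.
sql = unified_orders_query(
    "cloud_lake.sales.orders_recent",
    "onprem_lake.sales.orders_archive",
)
print(sql)
```

The point of the sketch is that the consumer of the query sees one result set and does not need to know which rows came from the cloud and which came from on-prem storage.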

On-Prem Data Lake

On-prem data lakes, using solutions from vendors like Vast Data, NetApp, and MinIO, provide the local storage and compute power necessary for handling sensitive or latency-sensitive data.

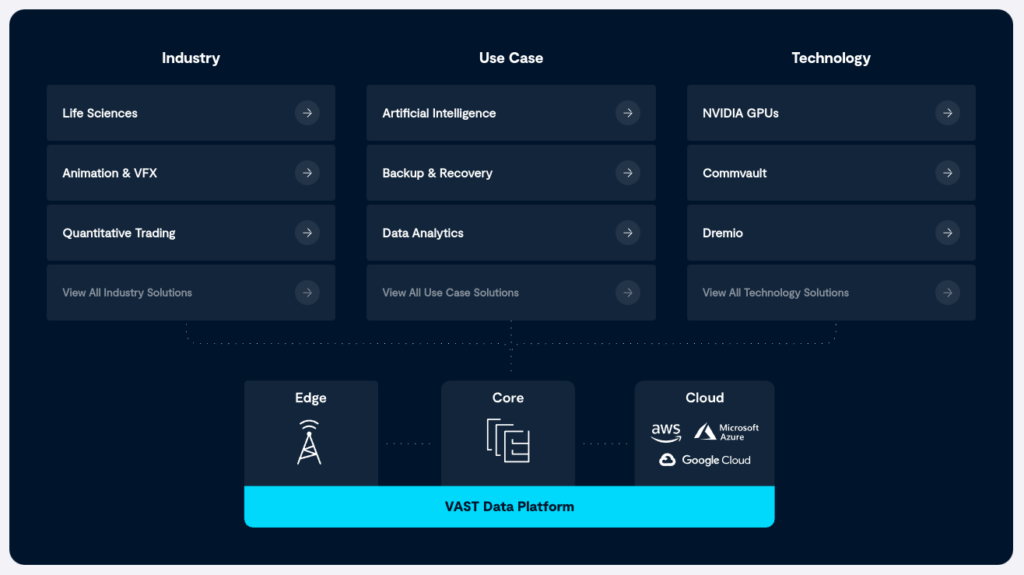

Vast Data: Vast Data offers a high-performance, scalable storage solution that combines the benefits of flash storage with the economics of hard drives. Its Universal Storage architecture provides a single tier of storage that delivers low latency, high throughput, and massive scalability. Vast Data’s solutions are ideal for environments requiring high-speed data access and robust performance, such as AI and machine learning workloads. Vast Data also offers services for edge-to-cloud computing, a high-performance database, and a serverless function framework for creating powerful and unique event-based workflows.

NetApp: NetApp offers StorageGRID, a highly scalable and flexible object storage solution designed to manage massive amounts of unstructured data. StorageGRID integrates seamlessly with various cloud services, providing a hybrid model that balances on-premises and cloud storage. Its advanced features, such as policy-driven data management, metadata tagging, and intelligent data placement, ensure optimal performance and cost efficiency. NetApp’s solutions are ideal for organizations looking to implement a hybrid data lakehouse with robust on-premises storage capabilities.

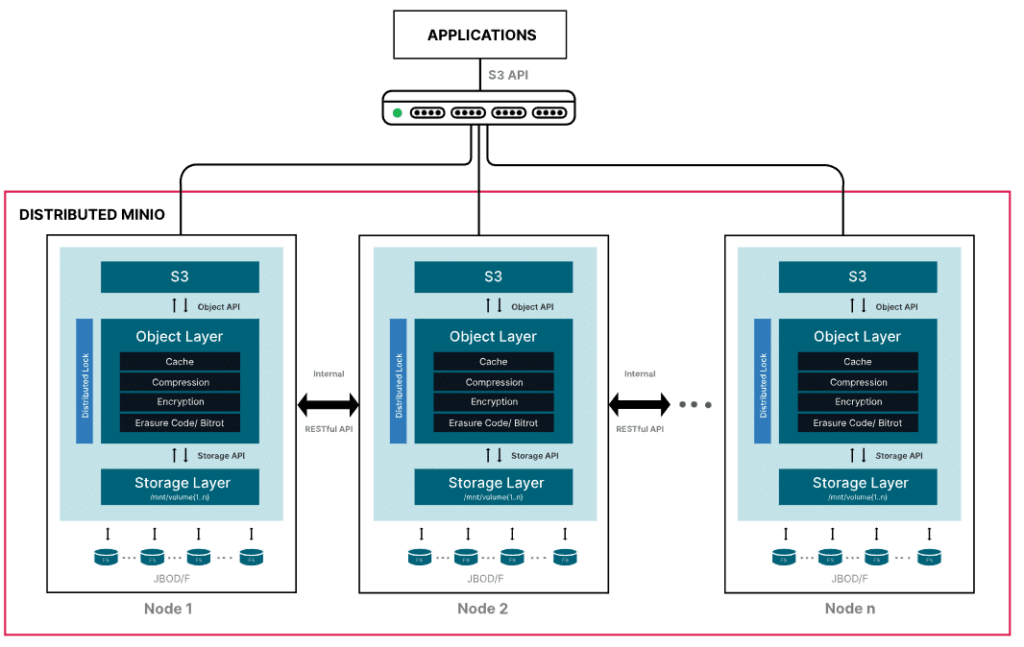

MinIO: MinIO offers a high-performance, software-defined object storage system that is compatible with the Amazon S3 API. MinIO is designed for large-scale data environments, providing high throughput and scalability. Its simplicity, speed, and cloud-native architecture make it an excellent choice for on-prem data lakes that need to integrate with cloud storage solutions seamlessly. MinIO is particularly valued for its ease of deployment, robust security features, and compatibility with a wide range of data tools.

Each of these on-prem solutions provides unique advantages, making them valuable components of a hybrid data lakehouse. Vast Data excels in performance and scalability, NetApp offers comprehensive data management and hybrid integration, and MinIO provides high-performance object storage with cloud-native capabilities.
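Because MinIO speaks the Amazon S3 API, an S3 client can usually be pointed at cloud or on-prem storage by changing little more than the endpoint. The Python sketch below shows that idea with plain dictionaries. The property keys are modeled on PyIceberg's S3 settings and should be treated as an assumption to check against your client's documentation; the `s3_properties` helper, hostname, and credentials are hypothetical placeholders.

```python
from typing import Dict, Optional


def s3_properties(access_key: str, secret_key: str,
                  region: Optional[str] = None,
                  endpoint: Optional[str] = None) -> Dict[str, str]:
    """Build S3 connection properties for an S3-compatible object store.

    Key names are an assumption modeled on PyIceberg's S3 settings.
    """
    props = {
        "s3.access-key-id": access_key,
        "s3.secret-access-key": secret_key,
    }
    if region is not None:
        props["s3.region"] = region
    if endpoint is not None:
        # Only needed when the store is not the default AWS endpoint.
        props["s3.endpoint"] = endpoint
    return props


# Cloud: rely on the provider's default endpoint for the chosen region.
cloud_props = s3_properties("CLOUD_KEY", "CLOUD_SECRET", region="us-east-1")

# On-prem: same shape, plus an explicit endpoint (hypothetical MinIO host).
onprem_props = s3_properties("MINIO_KEY", "MINIO_SECRET",
                             endpoint="http://minio.internal:9000")

print(sorted(set(onprem_props) - set(cloud_props)))
```

In practice the credentials would come from a secrets manager or environment variables rather than literals in code.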

Conclusion

Hybrid Apache Iceberg Data Lakehouses represent the future of data architecture, combining the strengths of both cloud and on-prem solutions to deliver optimal cost, performance, and agility. By strategically leveraging the benefits of each environment, organizations can build a robust, flexible, and efficient data infrastructure. Platforms like Dremio facilitate this hybrid approach by connecting to various data sources and utilizing the Apache Iceberg format, ensuring that your data is always accessible and performant, regardless of where it resides. Whether you are looking to optimize costs, enhance performance, or achieve greater agility, a hybrid data lakehouse could be the perfect solution for your data needs.

If you want to discuss the architecture for your hybrid data lakehouse, let’s have a meeting!

Here Are Some Exercises for You to See Dremio’s Features at Work on Your Laptop

- Intro to Dremio, Nessie, and Apache Iceberg on Your Laptop

- From SQLServer -> Apache Iceberg -> BI Dashboard

- From MongoDB -> Apache Iceberg -> BI Dashboard

- From Postgres -> Apache Iceberg -> BI Dashboard

- From MySQL -> Apache Iceberg -> BI Dashboard

- From Elasticsearch -> Apache Iceberg -> BI Dashboard

- From Kafka -> Apache Iceberg -> Dremio