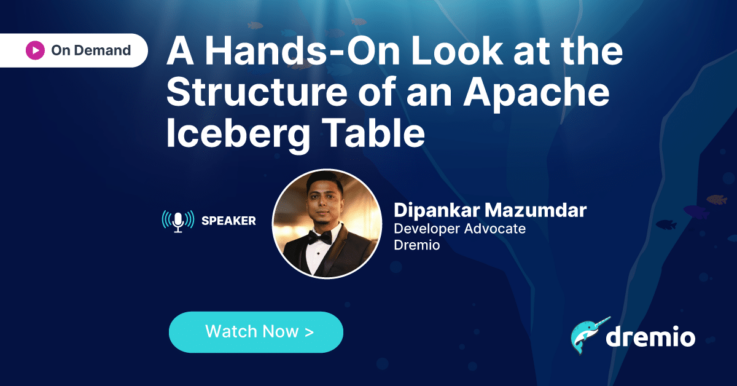

Dipankar is currently a Developer Advocate at Dremio, where his primary focus is educating data practitioners such as engineers, architects, and scientists on Dremio’s lakehouse platform and open-source projects such as Apache Iceberg and Arrow that help data teams apply and scale analytics. In his past roles, he worked at the intersection of machine learning and data visualization. Dipankar holds a Master's in Computer Science, and his research area is Explainable AI.