44 minute read · May 8, 2019

Running SQL Based Workloads in The Cloud at 20x – 200x Lower Cost Using Apache Arrow

Webinar Transcript

Jacques:All right. Let’s get started. Hi there everybody. I’m Jacques, we’ll be talking about Running SQL Based Workloads Faster Using Apache Arrow. Quickly about myself, I am co founder and CTO of Dremio. Dremio is company has been around for about four years. We’re downstairs in the call if you want to know more about Dremio and I’ll talk about some of the things that we use inside of Dremio with Arrow in this talk.I’m also the PMC Chair of the Apache Arrow Project. Apache Arrow started about three years ago, when myself and Wes McKinney got together and realized that data scientists and database people needed to get closer together and work well together, and so that’s what a lot of this is built on top of.Let’s start out. What I want to talk about here is, if you think about performance, performance by itself is actually meaningless in the cloud, like on prem. When you have fixed resources, it’s all about like how much power, and how much speed can I get out of this set of resources? In the cloud, I can always add more resources. Really, performance is about cost. How can I balance my performance and my cost most effectively?In many cases, I can scale but I’d rather not just spend millions of dollars on my infrastructure. Rather than talk about, “Hey, look how fast things are,” which is what this talk could be, I’m going to talk about a specific set of things that we do to make things faster and why they work, and give you an overview on the technical side for those things. But really, different kinds of use cases and different kinds of challenges have different sets of technical solutions to those things.For a particular use case, some of these solutions may not be appropriate, and others may be appropriate. For some use cases, all of them may be appropriate, so it’s really a set of tools that you’re going to be thinking about in terms of how you’re solving your problems, and which tools can you bring to bear for this particular set of challenges.The last thing is that if you think about the sort of way that people are approaching things, one of the things that I think is really important is that you want to think about things to be sort of loosely coupled. Think about the old-school way of doing things, such as building a data warehouse, letting everything into the data warehouse, and doing everything inside of the data warehouse. There were some nice things about that. But in reality, we have a much more complicated sort of data ecosystem today.Being able to loosely couple those things and solve things whether or not you’ve loaded the data, whether you’ve interacted with the data before – all those things become very important. Let’s talk briefly about Dremio and Arrow.Arrow, as I mentioned before, has been around about three years, and has seen huge adoption;it’s really sort of beyond everybody’s expectations, including my own in terms of how well it’s gone. The goal with Arrow was really to try to provide a common way to represent data and a way to process data very efficiently. It’s been incorporated into a bunch of different projects. Some of them you probably know – Python, Spark, NVIDIA and their GPU RAPIDS initiative also use Arrow. At Dremio, we use Arrow extensively.Really what Arrow is about is about, is that it allows you to have this loosely-coupled set of technologies that work together and achieve faster analytical performance. The second thing is Dremio. I’m going to be talking about that and talking about Arrow in the context of Dremio on how to make things go faster.Dremio is a way to interact with data, find data, access it, share it, curate it, and collaborate with others on it. It’s built for the cloud, and more than half of all Dremio usage is in the cloud, whether that’s Azure, AWS, or Google. At its core, Arrow is a processing engine for data. Inside of Dremio, everything that we do is against the Arrow representation of data, and really it’s trying to provide a SQL for any source.No matter whether you’re interacting with a relational database, or non relational database, a NoSQL system, a data lake, or cloud storage – all those things should have a common interface that allows people to think about how they’re interacting with the data, rather than the mechanics behind how that data is being stored.There’s an Open Source and an Enterprise Edition, so you can go download the Open Source Community Edition and play with it all you want. Everything that I’m demonstrating here is something that you can try out in the Community Edition. One of the key things that we approach a little differently is trying to integrate multiple technologies together.There are lots of solutions out there that are trying to solve what I would describe as a niche. I want to just curate it and make those datasets available for analysis. I want to figure out what all my data sets are, and catalog those, and share those with my colleagues. I want to accelerate the performance of access to data, and I want to federate multiple sources together. Those are all really useful things to be able to achieve. But if you can combine them together, we think that you have a much better experience in terms of how you’re trying to achieve your goals, which is to get business value out of your data.These are the four things I’m going to talk about. I’m going to go through each of these and talk about how they work and how they can impact your workload. The first one is Gandiva. Gandiva is a processing initiative that was built in Dremio and then we contributed to the Arrow project, so that anybody can use it if they’re working with Arrow data. Then secondly, I’ll talk about Column-Aware, Predictive Coalescing IO, and how that can substantially impact your performance working in the cloud. Then, I’ll talk about what we call a Columnar Cloud Cache or C3, which is about how you can cache portions of data to make things go faster, and then I’ll also talk about Arrow Flight, and how that can accelerate the access of data from whatever application it is that is consuming the data at the end of the day.Let’s start with Gandiva. I didn’t go into detail about Arrow; I think that I’ve done enough talk, so hopefully most of you have some sense of what Arrow is. But let me talk about Gandiva. Arrow is a representation, at its core, of data and it’s language-agnostic in its representation.The goal behind Arrow was to basically have a better way of moving data between systems. The way we figured out how to try to solve the transport problem was to solve it for processing by saying, “Hey, if two systems have a common way of representing data for processing purposes, then it can be very efficient to move data between those systems.”Arrow’s designed first and foremost for processing, even though it allows communication between different systems. Gandiva delivers on the promise of that by basically saying, “Hey, if we have a canonical representation of data, then we can do really efficient things in terms of how we process that data. I don’t have to work through any different APIs to interact with that data.”

Then secondly, I’ll talk about Column-Aware, Predictive Coalescing IO, and how that can substantially impact your performance working in the cloud. Then, I’ll talk about what we call a Columnar Cloud Cache or C3, which is about how you can cache portions of data to make things go faster, and then I’ll also talk about Arrow Flight, and how that can accelerate the access of data from whatever application it is that is consuming the data at the end of the day.Let’s start with Gandiva. I didn’t go into detail about Arrow; I think that I’ve done enough talk, so hopefully most of you have some sense of what Arrow is. But let me talk about Gandiva. Arrow is a representation, at its core, of data and it’s language-agnostic in its representation.The goal behind Arrow was to basically have a better way of moving data between systems. The way we figured out how to try to solve the transport problem was to solve it for processing by saying, “Hey, if two systems have a common way of representing data for processing purposes, then it can be very efficient to move data between those systems.”Arrow’s designed first and foremost for processing, even though it allows communication between different systems. Gandiva delivers on the promise of that by basically saying, “Hey, if we have a canonical representation of data, then we can do really efficient things in terms of how we process that data. I don’t have to work through any different APIs to interact with that data.” If you think about old ways of interacting with it, it was very much API-based, where you’d call up an interface and say, “Hey, give me the next record, give me an Excel on this record.” That worked well because you could build an application and the internals didn’t matter to you. But the problem there is that if you’re doing huge amounts of processing, then all those interactions, all those method calls and invocations can incur a substantial performance penalty.If you can have a representation of data that is very well known and interact directly with that memory representation, then you can be in a lot of things. That’s really what Gandiva is about – it’s about high-speed compute, and we leverage it inside of Dremio. If you download Dremio today, we’re using Gandiva for processing, and that’s one of the key reasons that we process it as fast as we do. It is built primarily for CPUs today, but the vision is actually to also extend it to GPUs in the future.At its core, the Gandiva is built on top of LLVM, and so it will take an expression tree and compile that down and then process that expression. The expression tree is generated inside of whatever application you have. You compile that down with the Gandiva compiler into an actual piece of execution code. Then the execution code does something very simple.The execution code is simply responsible for taking in batches of Arrow data, and then outputting in batches of Arrow data. The complexity is all in how we compile this information. But how it actually plugs into the application is quite simple in that you just say, “Hey, I’ve got a stream of Arrow data, and I can apply this operation on top of it, and now I’ve got a new stream of Arrow data.”What does it actually do? It supports an arbitrary set of expressions. When you think about compilation inside of a query execution – there are lots of different operations you might be doing a hash join, you might be doing an aggregation, you might be doing some kind of expression calculation.If you look at those different things, things like aggregations and joins don’t need that much runtime compilation to perform well. The reason being is that the patterns and where they spend the time are things that are fairly consistent across different people using those operations. Okay?With expression evaluation, runtime compilation becomes extremely critical to performance, because every single expression that someone’s writing is going to be a little different. A plus B plus C or A plus B divided by C are going to be different expressions, and I want to figure out what’s the fastest way to run each of those things and compile those things. That’s really what Gandiva is about – it’s saying, “Hey, whatever arbitrary expression you have, I can compile that very, very efficiently.” Okay?The simple version of this is A plus B;that’s a pretty straightforward thing. It’s actually primitives inside of LLVM that allow that basic operation, but then you have to apply things like SQL semantics on top of that. SQL semantics include the consideration of null availability and how that impacts the different operations. How do I calculate that correctly?

If you think about old ways of interacting with it, it was very much API-based, where you’d call up an interface and say, “Hey, give me the next record, give me an Excel on this record.” That worked well because you could build an application and the internals didn’t matter to you. But the problem there is that if you’re doing huge amounts of processing, then all those interactions, all those method calls and invocations can incur a substantial performance penalty.If you can have a representation of data that is very well known and interact directly with that memory representation, then you can be in a lot of things. That’s really what Gandiva is about – it’s about high-speed compute, and we leverage it inside of Dremio. If you download Dremio today, we’re using Gandiva for processing, and that’s one of the key reasons that we process it as fast as we do. It is built primarily for CPUs today, but the vision is actually to also extend it to GPUs in the future.At its core, the Gandiva is built on top of LLVM, and so it will take an expression tree and compile that down and then process that expression. The expression tree is generated inside of whatever application you have. You compile that down with the Gandiva compiler into an actual piece of execution code. Then the execution code does something very simple.The execution code is simply responsible for taking in batches of Arrow data, and then outputting in batches of Arrow data. The complexity is all in how we compile this information. But how it actually plugs into the application is quite simple in that you just say, “Hey, I’ve got a stream of Arrow data, and I can apply this operation on top of it, and now I’ve got a new stream of Arrow data.”What does it actually do? It supports an arbitrary set of expressions. When you think about compilation inside of a query execution – there are lots of different operations you might be doing a hash join, you might be doing an aggregation, you might be doing some kind of expression calculation.If you look at those different things, things like aggregations and joins don’t need that much runtime compilation to perform well. The reason being is that the patterns and where they spend the time are things that are fairly consistent across different people using those operations. Okay?With expression evaluation, runtime compilation becomes extremely critical to performance, because every single expression that someone’s writing is going to be a little different. A plus B plus C or A plus B divided by C are going to be different expressions, and I want to figure out what’s the fastest way to run each of those things and compile those things. That’s really what Gandiva is about – it’s saying, “Hey, whatever arbitrary expression you have, I can compile that very, very efficiently.” Okay?The simple version of this is A plus B;that’s a pretty straightforward thing. It’s actually primitives inside of LLVM that allow that basic operation, but then you have to apply things like SQL semantics on top of that. SQL semantics include the consideration of null availability and how that impacts the different operations. How do I calculate that correctly? The Gandiva expression library probably has 1000 or several thousand expressions now; it’s basically supporting filtering and projecting operations to apply a set of conditions to a set of Arrow records.So, how does it perform? Well, this goes to the Arrow representation. For those of you that don’t remember or need a reminder. The Arrow representation of data is a columnar representation of data is columnar in memory, right? Parquet work those are columnar on disk representations.Most systems typically will bring data in memory as a worldwide representation. They take advantage of the underlying IO capabilities by using columnar there, but they don’t actually take advantage of the CPU capabilities around factorization by using columnar there. Arrow was trying to change that by basically saying, “Hey, the best representation in memory is also columnar.”Now, let’s talk about the representation of an Arrow integer. Let’s say we’re doing an A plus B operation. An Arrow integer is, let’s say, a four-byte integer – A and B are both four-byte integers. The Arrow representation of that is actually two independent chunks of memory for each of those things. A four-byte integer is actually a four-byte integer end-to-end each of the different values, and then separately from that there’s a second structure, which is a bitmap of whether each value is valid or not. It describes the nullability of each of those guys.When I’m doing A plus B, I’m actually working with four data structures; they have validity and the data for each of A and B. Okay? Because we know that’s the representation inside of the LVM code and the Gandiva operations, we can actually take advantage of this and start doing null decomposition, and work with null values and how we deal with null resolution completely independent of the data itself.In this example here, so we’ve got a validity and data vector for A, and we’ve got a validity and data vector for B. We can actually take the validity of vectors, and in the end of the validity vectors we’ll find what is the validity of the output according to SQL’s null in/null out semantics. SQL standard semantic is, if one of my arguments is null, then my output is null.Normally, when you would write code to solve this problem, you would actually write a bunch of conditional statements. You would say, if A is null then null, if B is null then null L = A plus B. Okay? But if you know CPUs well, you know that all of this branching logic can substantially reduce the pipeline of the CPU. Instead of doing that, you decompose and see these two different structures and you can say, “Okay, I’m going to do a bitwise end. Okay? That’s going to allow me to work on each word, most likely, or maybe larger than a word if I get sent the instructions.”But let’s say with each word, I can do 64 value calculations at once, where I say, “Hey, I’m going to take these 64 values and these 60 word values and do a bitwise, and now I know the nullability of those 64 output values, right? Then separately, I can do this SIMDadd for the data. Okay? I can add the two vectors together. The simplest version of an add would be, “Hey, let me just loop through these things and add them.” A more complex one would be “Hey, I can work with things that are wider than four bites. And so I’m actually going to add multiple things together with the largest of the operations.”But in either case, there’s no conditions there either. What happens then is that means that this entire logic is entirely there’s no conditions anywhere in this logic, no branch anywhere in this logic. The performance can be substantially faster. Plus, when we have SIMD, we can take advantage of those operations. Okay? This is one very trivial example of how you can customize what’s going on in the compilation to improve the performance of things.This is one example of null management. Nulls are big problem in terms of processing to improve performance. Inside of Gandiva, there are basically a whole set of primitives around how nulls are managed. There are expressions where null is null. There are expressions where I’m always a non-null output, even if I have a null input. There are expressions where, depending on what’s going on, maybe a nullable input is not a nullable input. Case statements are actually a really interesting thing when you’re applying the null semantics to it to improve performance.Basically, inside of Gandiva is all the logic to deal with all of these things, so you can just build an expression that’s going to be working against the Arrow data, and then have a very good performance. As I mentioned, the other thing you can do is, because these representations are all columnar in nature, they’re actually designed specifically to be consistent with representations that the underlying CPU expects.If you want to do a SIMD operation, where you’re adding multiple values, here’s an example of a bunch of two-byte integers. I’ve got a selection of two-byte integers, A and B. This is a two-byte version instead of a four-byte version. I’m going to add them together. When I do that, I could do it where I read the first integer on each side and then add those together. Then I read the second integer on each side and add those two together, right? It’s going to be the number of reads plus the number of adds, and so it’s going to be two 3x the number of records.But the reality is that CPUs are better at this than that. We can instead take the CPU and say, “Hey, CPU, you know how to do 128 bit adds at one time, where these things are co-located inside of these chunks of memory, and do that all together.” All of the sudden, this becomes three instructions instead of 24 instructions. It’s 8x improvement in the number of instructions that we have to apply here to complete this operation.Now, it’s not something that you actually have to worry about once you’ve got something like Gandiva to solve the problem, but this is why Gandiva can go more quickly than other things. The core of this is that the underlying representation also lines up with this. If step one was “reformat my data into a data representation that the CPU could understand to do SIMD operations. Then if step two is to take advantage of the SIMD operation, you’re going to lose a lot of the benefits you could otherwise gain by using these operations.

The Gandiva expression library probably has 1000 or several thousand expressions now; it’s basically supporting filtering and projecting operations to apply a set of conditions to a set of Arrow records.So, how does it perform? Well, this goes to the Arrow representation. For those of you that don’t remember or need a reminder. The Arrow representation of data is a columnar representation of data is columnar in memory, right? Parquet work those are columnar on disk representations.Most systems typically will bring data in memory as a worldwide representation. They take advantage of the underlying IO capabilities by using columnar there, but they don’t actually take advantage of the CPU capabilities around factorization by using columnar there. Arrow was trying to change that by basically saying, “Hey, the best representation in memory is also columnar.”Now, let’s talk about the representation of an Arrow integer. Let’s say we’re doing an A plus B operation. An Arrow integer is, let’s say, a four-byte integer – A and B are both four-byte integers. The Arrow representation of that is actually two independent chunks of memory for each of those things. A four-byte integer is actually a four-byte integer end-to-end each of the different values, and then separately from that there’s a second structure, which is a bitmap of whether each value is valid or not. It describes the nullability of each of those guys.When I’m doing A plus B, I’m actually working with four data structures; they have validity and the data for each of A and B. Okay? Because we know that’s the representation inside of the LVM code and the Gandiva operations, we can actually take advantage of this and start doing null decomposition, and work with null values and how we deal with null resolution completely independent of the data itself.In this example here, so we’ve got a validity and data vector for A, and we’ve got a validity and data vector for B. We can actually take the validity of vectors, and in the end of the validity vectors we’ll find what is the validity of the output according to SQL’s null in/null out semantics. SQL standard semantic is, if one of my arguments is null, then my output is null.Normally, when you would write code to solve this problem, you would actually write a bunch of conditional statements. You would say, if A is null then null, if B is null then null L = A plus B. Okay? But if you know CPUs well, you know that all of this branching logic can substantially reduce the pipeline of the CPU. Instead of doing that, you decompose and see these two different structures and you can say, “Okay, I’m going to do a bitwise end. Okay? That’s going to allow me to work on each word, most likely, or maybe larger than a word if I get sent the instructions.”But let’s say with each word, I can do 64 value calculations at once, where I say, “Hey, I’m going to take these 64 values and these 60 word values and do a bitwise, and now I know the nullability of those 64 output values, right? Then separately, I can do this SIMDadd for the data. Okay? I can add the two vectors together. The simplest version of an add would be, “Hey, let me just loop through these things and add them.” A more complex one would be “Hey, I can work with things that are wider than four bites. And so I’m actually going to add multiple things together with the largest of the operations.”But in either case, there’s no conditions there either. What happens then is that means that this entire logic is entirely there’s no conditions anywhere in this logic, no branch anywhere in this logic. The performance can be substantially faster. Plus, when we have SIMD, we can take advantage of those operations. Okay? This is one very trivial example of how you can customize what’s going on in the compilation to improve the performance of things.This is one example of null management. Nulls are big problem in terms of processing to improve performance. Inside of Gandiva, there are basically a whole set of primitives around how nulls are managed. There are expressions where null is null. There are expressions where I’m always a non-null output, even if I have a null input. There are expressions where, depending on what’s going on, maybe a nullable input is not a nullable input. Case statements are actually a really interesting thing when you’re applying the null semantics to it to improve performance.Basically, inside of Gandiva is all the logic to deal with all of these things, so you can just build an expression that’s going to be working against the Arrow data, and then have a very good performance. As I mentioned, the other thing you can do is, because these representations are all columnar in nature, they’re actually designed specifically to be consistent with representations that the underlying CPU expects.If you want to do a SIMD operation, where you’re adding multiple values, here’s an example of a bunch of two-byte integers. I’ve got a selection of two-byte integers, A and B. This is a two-byte version instead of a four-byte version. I’m going to add them together. When I do that, I could do it where I read the first integer on each side and then add those together. Then I read the second integer on each side and add those two together, right? It’s going to be the number of reads plus the number of adds, and so it’s going to be two 3x the number of records.But the reality is that CPUs are better at this than that. We can instead take the CPU and say, “Hey, CPU, you know how to do 128 bit adds at one time, where these things are co-located inside of these chunks of memory, and do that all together.” All of the sudden, this becomes three instructions instead of 24 instructions. It’s 8x improvement in the number of instructions that we have to apply here to complete this operation.Now, it’s not something that you actually have to worry about once you’ve got something like Gandiva to solve the problem, but this is why Gandiva can go more quickly than other things. The core of this is that the underlying representation also lines up with this. If step one was “reformat my data into a data representation that the CPU could understand to do SIMD operations. Then if step two is to take advantage of the SIMD operation, you’re going to lose a lot of the benefits you could otherwise gain by using these operations. In this case, because the representation is consistent with what the CPU expects, we can improve performance substantially. How is Gandiva used? Well, the nice part about Gandiva is that it’s language-agnostic, so it has continued to achieve on the promise that Arrow has, which is we don’t care what language it is. Arrow has something like I think 10 or 12 different language bindings – all the common languages you might work with today. Gandiva has several of those already. I believe it has Java, C++, Python, and Rust.In all those cases, you build up the expression tree. The expression trees communicate with Protobuf because that’s a common format that works across different languages. Then you hand that to Gandiva to compile and use. In the context of Dremio, we actually run Dremio inside of the JVM. The JVM is notorious for not working well with other languages. Because of Arrow, we can actually work very well with Gandiva and make that work extremely efficiently. That’s Gandiva. That’s the first of the four topics that we’re going to cover.The second one is reading. Gandiva will make you go faster, whether you’re in the cloud or on-prem; it’s just about processing and making that go faster. One of the really interesting challenges in the cloud is that object stores are awesome, but they’re also slow. They’re way slower than if I’ve got a VM on my local machine.Let’s talk about the data representation of columnar data and how that maps to the performance that we can get by doing changes to how we’re doing the underlying reads. Okay? This is a quick review of how columnar data formats work. Imagine you have a table of data off here on the left, and you have these columns, I’ve got color-coded columns, so that you can see what’s going on with this data.The first thing that happens is, is that you will then generally take a subset of columns and put them into some kind of chunk, what might be called a row group or a stripe or something like that. I’ll use the Parquet language because I use Parquet the most. That’s called Parquet row groups.Basically, you say, “Okay, I’m going to take this subset of data, and I’m going to put those together so that all those rows are together, because I want that to be sort of a manageable size.” Size might be 128 megabytes, it might be one gigabyte, something like that.Once I have those rows of data together, I then coalesce the values for each column independently, right? I put all the oranges together, then all the yellows, then all the greens, then all the light blues, then all the dark blues, and then all the grays, right? You put those all together and that’s actually the format of that row group inside of the Parquet file. This is designed so that you can improve the performance of reading from disk.What happens is, if I only need orange and green, then I don’t have to read the yellow, and I don’t have to read the blues or the grays, right? I can substantially reduce my read on disk. Inside of one of those “Column Chunks” in Parquet is actually what are called pages, which are little compressed units that are the actual data itself. This is how, roughly speaking, all the common formats are composed. There are slight variations to all of these things, but this is basically how they all look.This is great. It works really well for a lot of things. But, if you start thinking about how it reads, it becomes a little bit problematic, right? Because if I want to read that data, and I want to turn it into a row-wise representation, which is how most processing systems work, or even a columnar representation, where I’m not trying to read all the data at once. I can start from the beginning of the file to the end the file. I read all the data and everything is fine in terms of reading, but I want to take advantage of the fact that I’m only going to read some of the columns. I don’t really want to read from start to finish. If I want an orange column and a green column, I can read from the start of the orange to the end of the orange and start the green to the end of the green, in that order.But that’s also pretty inefficient. The different columns inside that chunk are going to use substantially different amounts of data depending on how well they can be compressed. If I read all of the orange before I read any of the green, I may be using a substantial amount of memory holding the orange in memory, before I even get to reading the green.Depending on the operation, it may be that I’m wasting all of those reads and all of that memory. When you read from disk, there’s this thing called Readahead. it’s trying to figure out how we can go faster reading from disk. If you think about a read, if every time you asked for one byte of data from disk , and it would have to get the data of that one byte and read it off the disk and then hand it back to you, things would be ridiculously slow.At various layers of your IO, there’s basically buffers that say, “Hey, I’m going to read this much at a time.” Then there’s this Readahead concept, which is, “Hey, if he just asked for the first 4K of the file, then odds are he’s going to want the next 4K of the file.” That’s a simplified version of Readahead. But basically, a client’s going to say, “Hey, I want the first bites.”I’m using a little color coding here, which I’m going to continue to use. Color coding here is, “Hey, initially, this orange is – I got to read this in line. I’m waiting for this.” This first set of data – I’m going to have to wait for it, because I’m asking for it, and then I have to go and read from disk to get it.Now, most systems will say, “Hey, you just asked for the first f4K?” I might as well get the next 4K because odds are, you’re going to want that, too. This is to support pipelining, it because if you then ask for the next 4K, I already have that, so I can hand it back to you, and then ask you the next 4K, while you’re consuming that 4K.The goal being that the underlying IO is ahead of you, so that you’re not waiting for it. If I read the first 4K, the system says, “Okay, I’m going to 8K from disk.” Then when I had the second request, I get a green request, which means that when I asked for it, it was already ready for me, and therefore, I don’t have to wait for the disk again? This is critical to performance – it’s basically anticipating what is needed in order to improve the performance of what we’re going to get.Again, the Readahead is going to say, “Okay, you just asked for that 4K, so I better go get the next 4K.”? This works really well with files that are red sequential. But it doesn’t work as well when you look at something like a Parquet format. This columnar format, I’ve shifted that,so that’s along the top and down the left-hand side. I’ve got time and what I want to show you what it is what happens when a naive Readahead approach interacts with reads of these columnar formats.What happens is that most systems will start by reading the first column, and they’ll read a subset of the first column in order to get some of the data. They don’t want to read the whole of orange into memory. They’re going to read only the first little bit there and say, “Well, I only want to have 5000 bytes, and I want to send 5000 bytes down my pipeline before I get the next 5000 records.” Those 5000 records are down the pipeline because there are other operations, like if I’m doing a SQL, I might be doing read from disk, do a join, then do an aggregation, and do some other operation like a filter or something like that.I want to get the data through that pipeline as quickly as possible, and so I don’t want to read all of orange before I start returning data downstream. I make that first request, that orange request, which is, “Hey, I’m going to read this, and I’m going to wait for the read.” At the same time, the Readahead is going to say, “Okay, let me go and read the next 4K.” Right? But this is where it breaks down.At time two, I’m now going to say, “Okay, well I want the yellow cone.” Readahead is probably going to say, “Oh shoot.” This depends on the ticket Readahead. There are more advanced Readaheads that are better than this, but for illustration purposes, this is basically what happens. “Oh, shoot – that Readahead I didn’t need that. Let me throw that away” And that’s the red. I’m throwing away the data I just read, because it turns out that this person seek to another place at the file and is now reading at that location.

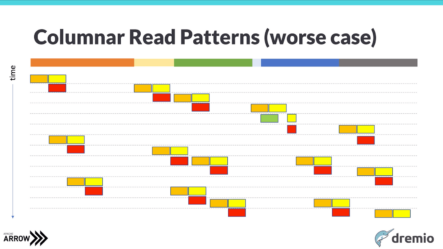

In this case, because the representation is consistent with what the CPU expects, we can improve performance substantially. How is Gandiva used? Well, the nice part about Gandiva is that it’s language-agnostic, so it has continued to achieve on the promise that Arrow has, which is we don’t care what language it is. Arrow has something like I think 10 or 12 different language bindings – all the common languages you might work with today. Gandiva has several of those already. I believe it has Java, C++, Python, and Rust.In all those cases, you build up the expression tree. The expression trees communicate with Protobuf because that’s a common format that works across different languages. Then you hand that to Gandiva to compile and use. In the context of Dremio, we actually run Dremio inside of the JVM. The JVM is notorious for not working well with other languages. Because of Arrow, we can actually work very well with Gandiva and make that work extremely efficiently. That’s Gandiva. That’s the first of the four topics that we’re going to cover.The second one is reading. Gandiva will make you go faster, whether you’re in the cloud or on-prem; it’s just about processing and making that go faster. One of the really interesting challenges in the cloud is that object stores are awesome, but they’re also slow. They’re way slower than if I’ve got a VM on my local machine.Let’s talk about the data representation of columnar data and how that maps to the performance that we can get by doing changes to how we’re doing the underlying reads. Okay? This is a quick review of how columnar data formats work. Imagine you have a table of data off here on the left, and you have these columns, I’ve got color-coded columns, so that you can see what’s going on with this data.The first thing that happens is, is that you will then generally take a subset of columns and put them into some kind of chunk, what might be called a row group or a stripe or something like that. I’ll use the Parquet language because I use Parquet the most. That’s called Parquet row groups.Basically, you say, “Okay, I’m going to take this subset of data, and I’m going to put those together so that all those rows are together, because I want that to be sort of a manageable size.” Size might be 128 megabytes, it might be one gigabyte, something like that.Once I have those rows of data together, I then coalesce the values for each column independently, right? I put all the oranges together, then all the yellows, then all the greens, then all the light blues, then all the dark blues, and then all the grays, right? You put those all together and that’s actually the format of that row group inside of the Parquet file. This is designed so that you can improve the performance of reading from disk.What happens is, if I only need orange and green, then I don’t have to read the yellow, and I don’t have to read the blues or the grays, right? I can substantially reduce my read on disk. Inside of one of those “Column Chunks” in Parquet is actually what are called pages, which are little compressed units that are the actual data itself. This is how, roughly speaking, all the common formats are composed. There are slight variations to all of these things, but this is basically how they all look.This is great. It works really well for a lot of things. But, if you start thinking about how it reads, it becomes a little bit problematic, right? Because if I want to read that data, and I want to turn it into a row-wise representation, which is how most processing systems work, or even a columnar representation, where I’m not trying to read all the data at once. I can start from the beginning of the file to the end the file. I read all the data and everything is fine in terms of reading, but I want to take advantage of the fact that I’m only going to read some of the columns. I don’t really want to read from start to finish. If I want an orange column and a green column, I can read from the start of the orange to the end of the orange and start the green to the end of the green, in that order.But that’s also pretty inefficient. The different columns inside that chunk are going to use substantially different amounts of data depending on how well they can be compressed. If I read all of the orange before I read any of the green, I may be using a substantial amount of memory holding the orange in memory, before I even get to reading the green.Depending on the operation, it may be that I’m wasting all of those reads and all of that memory. When you read from disk, there’s this thing called Readahead. it’s trying to figure out how we can go faster reading from disk. If you think about a read, if every time you asked for one byte of data from disk , and it would have to get the data of that one byte and read it off the disk and then hand it back to you, things would be ridiculously slow.At various layers of your IO, there’s basically buffers that say, “Hey, I’m going to read this much at a time.” Then there’s this Readahead concept, which is, “Hey, if he just asked for the first 4K of the file, then odds are he’s going to want the next 4K of the file.” That’s a simplified version of Readahead. But basically, a client’s going to say, “Hey, I want the first bites.”I’m using a little color coding here, which I’m going to continue to use. Color coding here is, “Hey, initially, this orange is – I got to read this in line. I’m waiting for this.” This first set of data – I’m going to have to wait for it, because I’m asking for it, and then I have to go and read from disk to get it.Now, most systems will say, “Hey, you just asked for the first f4K?” I might as well get the next 4K because odds are, you’re going to want that, too. This is to support pipelining, it because if you then ask for the next 4K, I already have that, so I can hand it back to you, and then ask you the next 4K, while you’re consuming that 4K.The goal being that the underlying IO is ahead of you, so that you’re not waiting for it. If I read the first 4K, the system says, “Okay, I’m going to 8K from disk.” Then when I had the second request, I get a green request, which means that when I asked for it, it was already ready for me, and therefore, I don’t have to wait for the disk again? This is critical to performance – it’s basically anticipating what is needed in order to improve the performance of what we’re going to get.Again, the Readahead is going to say, “Okay, you just asked for that 4K, so I better go get the next 4K.”? This works really well with files that are red sequential. But it doesn’t work as well when you look at something like a Parquet format. This columnar format, I’ve shifted that,so that’s along the top and down the left-hand side. I’ve got time and what I want to show you what it is what happens when a naive Readahead approach interacts with reads of these columnar formats.What happens is that most systems will start by reading the first column, and they’ll read a subset of the first column in order to get some of the data. They don’t want to read the whole of orange into memory. They’re going to read only the first little bit there and say, “Well, I only want to have 5000 bytes, and I want to send 5000 bytes down my pipeline before I get the next 5000 records.” Those 5000 records are down the pipeline because there are other operations, like if I’m doing a SQL, I might be doing read from disk, do a join, then do an aggregation, and do some other operation like a filter or something like that.I want to get the data through that pipeline as quickly as possible, and so I don’t want to read all of orange before I start returning data downstream. I make that first request, that orange request, which is, “Hey, I’m going to read this, and I’m going to wait for the read.” At the same time, the Readahead is going to say, “Okay, let me go and read the next 4K.” Right? But this is where it breaks down.At time two, I’m now going to say, “Okay, well I want the yellow cone.” Readahead is probably going to say, “Oh shoot.” This depends on the ticket Readahead. There are more advanced Readaheads that are better than this, but for illustration purposes, this is basically what happens. “Oh, shoot – that Readahead I didn’t need that. Let me throw that away” And that’s the red. I’m throwing away the data I just read, because it turns out that this person seek to another place at the file and is now reading at that location. Now, I’m going to say, “Oh, okay. The read in this location, I’m going to do another read ahead at this location.” Right? Well, guess what happens at time three. I’m going to read someplace else. Because that’s the part of the file that I need now. Again, I’m going to throw things away. What happens is that this continues on, and in some cases, it might be okay, right? In this case, that one column is so narrow that it happens that the Readahead overlapped with what was being read next. Then all of a sudden, we could get a green block. But mostly what you’re seeing is waiting for data, and the yellows and the reds are basically pulling that data but then not using that data. This just goes on indefinitely. Now, realistically, most Readaheads, if they see that they’re constantly wasting the reads, they’ll actually turn themselves off. At some point, the yellows and the reds will just go away, and you’ll just have a bunch of oranges. The problem though is that each time you do one of those oranges, it means that I asked for the data, and then I have to wait for the data to come back from the underlying system. Now, this isn’t a huge problem historically on local disks, because local disks are pretty fast. I know that they’re not the fastest thing in the world, but generally speaking, you set the buffers at a reasonable size, they got a reasonable latency and things are okay. When you go into the cloud, the latency are one to two orders of magnitude higher to get data back. That means that all of a sudden, these oranges, where we wait and try to get the information back from the underlying system, start to make things way slower. So when you’re interacting with these systems, you’ll start to see substantially slower performance than if using local disks. This is actually what leads to many people actually implementing things like using EMRor using HDFS on Amazon to actually do interactive workloads, because they can’t get the performance from S3, and this is one of the contributors. The latency itself is a problem, the throughput is a problem, but the actual read pattern and how we’re reading these things is also a problem. Basically, systems need to consume all these columns, and we really want to pipeline things, but we’re basically waiting. We’re not keeping the pipeline populated, and that becomes very painful. One solution is you can read all the file at once. A second solution is where you can say, “Well, I’m going to read large chunks of the file at once.” Or you say, “Well, I’m only going to read two big chunks and then two file megs is not as bad, but I’ve still got a lot of wastage, and I still have to figure out, “How do I anticipate these things” The third option – and this is what we’ve actually found to be very, very valuable – is you merge the IO layer with an understanding of the format. You say, “The Readahead should be aware of how the file format works,” or else you’re not going to get to the performance that you want to get to. That’s what we did; we built what we call a predictive columnar, which is basically a predictive columnar reader. It’s s going to understand the commoner formats and read those decks. What happens is, when you first start, you actually go and request a bunch of stuff before you even start reading. We’re going to read the first little bit of all the columns because we know that odds are, this is the most common pattern that happens. Maybe you’re only reading through the columns, and you’ve only asked for three of the columns. What that means is that then, when the normal read pattern layers on top (which continues to happen, because that’s a natural way to decode one column at a time, because you’re decompressing this column, and you’re decompressing that column, and then the other column), is that you get greens, right? You actually maintain understanding to every column and the Readaheads for every column independently, so that you can continue to make sure that each of these things are populated. Every time I go to request something, I’m going to get that data, and I’m going to keep track of that as a Readahead that’s independent of the other Readaheads to improve performance. This comes down to where you actually always have greens. This means that if the application is reading some data, then decompressing the data and sending it downstream, then reading some data and aggressively sending it downstream, then basically all the requests are happening with processing and decompressing that data.

Now, I’m going to say, “Oh, okay. The read in this location, I’m going to do another read ahead at this location.” Right? Well, guess what happens at time three. I’m going to read someplace else. Because that’s the part of the file that I need now. Again, I’m going to throw things away. What happens is that this continues on, and in some cases, it might be okay, right? In this case, that one column is so narrow that it happens that the Readahead overlapped with what was being read next. Then all of a sudden, we could get a green block. But mostly what you’re seeing is waiting for data, and the yellows and the reds are basically pulling that data but then not using that data. This just goes on indefinitely. Now, realistically, most Readaheads, if they see that they’re constantly wasting the reads, they’ll actually turn themselves off. At some point, the yellows and the reds will just go away, and you’ll just have a bunch of oranges. The problem though is that each time you do one of those oranges, it means that I asked for the data, and then I have to wait for the data to come back from the underlying system. Now, this isn’t a huge problem historically on local disks, because local disks are pretty fast. I know that they’re not the fastest thing in the world, but generally speaking, you set the buffers at a reasonable size, they got a reasonable latency and things are okay. When you go into the cloud, the latency are one to two orders of magnitude higher to get data back. That means that all of a sudden, these oranges, where we wait and try to get the information back from the underlying system, start to make things way slower. So when you’re interacting with these systems, you’ll start to see substantially slower performance than if using local disks. This is actually what leads to many people actually implementing things like using EMRor using HDFS on Amazon to actually do interactive workloads, because they can’t get the performance from S3, and this is one of the contributors. The latency itself is a problem, the throughput is a problem, but the actual read pattern and how we’re reading these things is also a problem. Basically, systems need to consume all these columns, and we really want to pipeline things, but we’re basically waiting. We’re not keeping the pipeline populated, and that becomes very painful. One solution is you can read all the file at once. A second solution is where you can say, “Well, I’m going to read large chunks of the file at once.” Or you say, “Well, I’m only going to read two big chunks and then two file megs is not as bad, but I’ve still got a lot of wastage, and I still have to figure out, “How do I anticipate these things” The third option – and this is what we’ve actually found to be very, very valuable – is you merge the IO layer with an understanding of the format. You say, “The Readahead should be aware of how the file format works,” or else you’re not going to get to the performance that you want to get to. That’s what we did; we built what we call a predictive columnar, which is basically a predictive columnar reader. It’s s going to understand the commoner formats and read those decks. What happens is, when you first start, you actually go and request a bunch of stuff before you even start reading. We’re going to read the first little bit of all the columns because we know that odds are, this is the most common pattern that happens. Maybe you’re only reading through the columns, and you’ve only asked for three of the columns. What that means is that then, when the normal read pattern layers on top (which continues to happen, because that’s a natural way to decode one column at a time, because you’re decompressing this column, and you’re decompressing that column, and then the other column), is that you get greens, right? You actually maintain understanding to every column and the Readaheads for every column independently, so that you can continue to make sure that each of these things are populated. Every time I go to request something, I’m going to get that data, and I’m going to keep track of that as a Readahead that’s independent of the other Readaheads to improve performance. This comes down to where you actually always have greens. This means that if the application is reading some data, then decompressing the data and sending it downstream, then reading some data and aggressively sending it downstream, then basically all the requests are happening with processing and decompressing that data. So the next time it’s going to read some more data, that data is going to already be there. That’s really what you want to have. To give you an example of the impact that this has, we ran some example queries on Azure Data Lake Storage Gen2. This was the performance improvement of this example workload in terms of just how much faster you can get by applying this technique. I’m going to jump to Arrow Flight and how that can make things faster, too. I’m realizing that I’m using more time than I should, so I’m going to go a little bit quicker here. Arrow Flight is a high-performance protocol for moving Arrow data between two different systems. Basically, it sends streams; you have clients and servers, but realistically, a stream can go in either direction. I can be pushing it stream up to my server, or I can be consuming it from my server. Now, because Arrow flights focus on analytical data sets, it’s very important to support parallelization. So if you have 100 hundred servers that are talking to 100 servers, you can actually send a stream of Arrow streams between all those 100 servers. It’s not a single stream concept like with ODBC and JDBC, which is one of the things that you can think about replacing a little bit. There’s a lot more to ODBC and JDBC than this. But for the purposes of analytical receipt of data, this is a good analog. So that’s single stream. Here we’re saying, “Hey, if I want to 100 streams to 100 streams, I can do that. If I want 1,000 streams to 1,000 streams, I can do that.” So we can move data very, very efficiently, and it’s all moved in this Arrow format.

So the next time it’s going to read some more data, that data is going to already be there. That’s really what you want to have. To give you an example of the impact that this has, we ran some example queries on Azure Data Lake Storage Gen2. This was the performance improvement of this example workload in terms of just how much faster you can get by applying this technique. I’m going to jump to Arrow Flight and how that can make things faster, too. I’m realizing that I’m using more time than I should, so I’m going to go a little bit quicker here. Arrow Flight is a high-performance protocol for moving Arrow data between two different systems. Basically, it sends streams; you have clients and servers, but realistically, a stream can go in either direction. I can be pushing it stream up to my server, or I can be consuming it from my server. Now, because Arrow flights focus on analytical data sets, it’s very important to support parallelization. So if you have 100 hundred servers that are talking to 100 servers, you can actually send a stream of Arrow streams between all those 100 servers. It’s not a single stream concept like with ODBC and JDBC, which is one of the things that you can think about replacing a little bit. There’s a lot more to ODBC and JDBC than this. But for the purposes of analytical receipt of data, this is a good analog. So that’s single stream. Here we’re saying, “Hey, if I want to 100 streams to 100 streams, I can do that. If I want 1,000 streams to 1,000 streams, I can do that.” So we can move data very, very efficiently, and it’s all moved in this Arrow format. I’m going to show you a quick example of this. What I have here is just a simple little Jupyter notebook that hopefully will run for me. What I’m going to do is I run a SQL query. This is running against Dremio. I just have a single node on EC2 in this case. So I run a SQL query here, where I’m just getting 2000 records back, because Arrow Flight is about transport, not about processing. So how do I efficiently get stuff back to the client? The client could be Python, it could be a BI tool, it could be something else entirely. Arrow Flight doesn’t really care. I’m using ODBC and Python here as examples. But basically, if you run a small query, it doesn’t really matter what the format is of the transport. It’s pretty fast either way, right? We’re at what point? .06 seconds versus .05 seconds. Flight is slightly faster, but they’re basically in the same ballpark. I’m going to go get five million records now. Let’s see if I can run this thing. Five million records is actually not that many records. I am constantly shocked by how slow this goes. So Dremio is sitting here, and it’s reading some data. There’s a time forhow much time it takes to read the data from its underlying storage. It’s then converting that into our presentation, and sending it over the network to the client that’s running inside of Python. The Python client is then going through that interface. If you look at the ODBC interface, it’s saying, “Give me the next record, okay?” Ask for the cell, ask for the cell, ask for the cell, right? That’s actually what’s happening inside of the pyodbc client; it’s basically reading every cell out of every record. I think that we’re reading something like 10 columns here. You’re talking about 15 million invocations and method calls to get this data back into Python. Right now, I’m not even converting it into a data frame or something else. I’m getting this back into the native representation, which is the pyodbc representation. It took 58 seconds, which is a long time to stand up here and talk and wait for it, and hope that it finishes. I’m going to run the same exact thing with Flight. The exact same query against the exact same system, same locations. The goal is faster. As you can see, it’s way faster, drastically faster. The reason is pretty simple; if you think about that first scenario, every single one of those invocations of all that code that had to be run doesn’t have to be run in the second case, because guess what? Arrow representation in one language is the same as Arrow representation in another language. Even though Dremio was running a JVM over here, it could communicate this Arrow Flight data back and its memory representation. The other side is like, “Hey, I know exactly how to consume it, the Arrow representation, and start working with it.” What this allows you to do, and this one I’m not going to run, because it takes about three minutes, is it allows you to load a billion records into a data frame in about three minutes. O Now, what would this have taken if I had done it with pyodbc, which I would honestly have never done, is about two and a half hours. The goal is that by using these techniques, all of a sudden, things that were not achievable before or not reasonable for people to wait for, you can now actually achieve them with reasonable effort. Now, if you know Python well, you may know that there’s actually something faster than pyodbc, and so I actually have another notebook here, which is turbodbc; it’s basically faster pyodbc. It’s actually also Arrow-native, so it knows how to do Arrow well and so that’s one of the things it does. I won’t run it for you. But that takes about 23 seconds for that exact same workload that was taking 58 seconds on pyodbc. Optimizing within Python, always a good place to start. Because frankly, a lot of times, there can be a bunch of optimizations that can done just there. But even with the fastest implementation of how you get data into a Python frame from a database today, with turbodbc, you’re still looking at 23, 24 seconds. That’s the kind of difference that you can have by using Arrow to solve data access. Lastly, let me just talk briefly about what we call the cloud columnar cache, or C3 for short, because it’s kind of a mouthful. This is talking about storage in the cloud. When you think about storage in the cloud, you don’t have a great option. You have some really great things, but they are only great at some things. You’ve got cloud storage, whether it’s S3 or ADLS or Azure storage, or blob storage, or whatever you have.. Unlimited scale, super cheap, and no management burden. Of course, you can spend a lot of money, but you didn’t notice that you had a bunch of data sitting there because it’s unlimited scale, but by and large it’s the best of the infrastructure-as-a-service. Now, it’s slow, though. Hopefully, most of you have seen this. It’s not super slow. It’s getting better all the time, but it’s way slower than if I’ve got a lovely HASH drive, especially if we’ve got a locally attached high-performance drive like NVME. It actually can be relatively expensive for hot data. If you’re querying the same thing over and over again from S3, you start to realize that the storage costs are not the biggest cost for you. It’s actually the access costs. That’s something else that you have to really think about. Then you’ve got this other option, which is locally attached storage, like locally attached SSDs. You can just do that sort of with basic s techniques. Or you could say, “Let me apply at HDFS or something like that on top of that to sort of have more layers on top of that.” Right? This actually allows you to get substantially better performance and it gets rid of the costs of doing those accesses, and S3, and whatnot. The downside, though, is that it is also a huge maintenance and management burden. It’s the reason that we went to infrastructure services to get rid of all this bullshit. But if you’re thinking about applications, yes, cloud storage is great for long- term storage and for analytical data sets where you don’t want to interact with performance. But if you want low latency and have critical applications, probably go with local SSD. Well, that sucks because you don’t want to have to make a choice. What we did with Dremio is we said, “Hey, let’s do something which combines the two of these things.” So it leverages the elasticity for cloud storage; that’s where you put your data. But then, let’s actually build a high-performance local caching layer for ephemeral storage, for local storage. We can do this across all workloads because the cost to implement this and use this is less than 10% for any missed read. The cost of actually caching locally is low enough that it actually can work really, really well. So we’re not caching files. So caching files is kind of a problem, because if you think about that previous picture, when we talked about Parquet, I may only read three of the columns of its file. If I cache the whole file, I’m wasting a huge amount of local storage. In fact, inside of a file in one of those column chunks, I may not read all of the column chunks. I might not see all the pages in that column chunk. The reason is that frequently, you apply common predicates; only some of the pages and some of those columns are actually what I want to store. Dremio C3 is basically allowing you to store sub chunks of sub files of sub columns locally to improve their performance. I call this screen door caching. What happens over time is that you see different subsets of the file being read, and then you can start to bring those into cache. So the greens here are reading into cache, the grays are reading from cache. Green means user access, gray means that it’s still in the cache. Over time, what will happen is that you’ll see some things get accessed a lot, and they’ll still stay in the cache. Other things, maybe only get accessed once, so they’ll very quickly come into and out of cache again. There’s no requirement of management; you simply check a box, and then all of a sudden, we’re using that local storage to make things much, much faster. How does it work? Well, so Dremio has these things called executors. These are the things that do data lifting, and each executor works independently. Each executor has this local cache, which is a combination of using RocksDB to maintain what’s called a page translation table, along with file system storage directly for the blobs of chunks or chunks of data. Each executor works independently, so there’s no scaling limits here. We have customers where we’re starting to deploy this who are running 10,000 cores and 200 terabytes of Dremio clusters to run with this. It can run at a massive scale and prepare parallelization. Then, basically, it does a read through and write through cache, but it’s also designed so that if nodes are coming in and out of the cluster, the system survives and does a good job of accommodating where that data should be afterwards. If you lose a node, that’s okay. That’s not the end of the world; it will continue to be fast and will actually reposition this stuff in an effective manner.What does the performance look like when you do this? Well, one of the key things that we figured out was basically doing what are called generational operations. Normally in caching layers, there’s a lot of locking and concurrency problems. What we did is basically we have these generations that are concurrently happening. Basically, operations are happening within one generation and that allows us to drastically reduce the contention, which allows us to do over 50,000 page translations a second, which is more than enough for most sizes of nodes that exist today. We can do five gigabytes of readsa second with this, against the standard hardware inside of an Amazon or something like that. As I mentioned, cache miss overhead is very, very low, and it supports a gigabyte a second of churn. What that means is that identifying new data that needs to be read that should be replacing old data that already exists inside the cache. So it can very quickly churn out things that are actually reads that we’re not doing commonly. Then there’s also the memory footprint of the cache itself. We use the disk and not the memory. A lot of people use memory to do caching. But the problem is that memory is a scarce resource, and doing it for cache that may or may not be used is very expensive. What we do instead is, we basically have a very small footprint of memory that we need to basically cover a huge amount of on-disk cache. If we look at most of the largest machines with NVME direct attached storage, you’re not going to go much above 16 terabytes today, and 16 terabytes requires less than one gigabyte of memory in order to cover the information. The other thing that we have is incomplete LRU, and what I mean by this is that an LRU tries to maintain an understanding of the entirety of the data set to try to make most LRUs try to understand every single value in the data set. The problem there is huge amounts of pressure on your memory in order to maintain all that data for every single translation or every single object that you’re matching. We have what we call an incomplete LRU, which is keeping statistics at hierarchical levels to improve the performance of the LRU without having to maintain everything at once. How do these things all work together? Let’s say you have a use case where you’re trying to access with Python, some Azure Storage? The initial approach is simple. I’m just going to use Python to access Azure storage. That’s fine, right? But the reality is that that’s not going to probably be fast enough for you, unless it’s a really small data set. You can just scale out things and Dremio is one way you can scale things out. There’s other things you could put here to scale things out, but Dremio is one example of that. That allows you to then scale up how many things are processing, because the odds are the data set that you want isn’t exactly this, isn’t that Azure storage. There’s some set of processing that must happen before you get to the data that you want, such as filtering things out, reorganizing things, cleansing things, and other things like that. But what really happens is that all of these different techniques come together. I actually didn’t talk about relational caching because I didn’t have enough time, but you can basically put this stuff in Azure storage, and use the predictive reader to read the stuff quickly into Dremio. Dremio can then use local cache to accelerate the access to some of that data, that’s just hot data. You then process that via Gandiva, and then move it back via transport with Arrow Flight back to the user. You can combine all these things together and get a substantial lift, depending on your situation. The amount that you can rise is substantial. Of course, you can add all these things together and say, “40,000 X improvement!” But that’s not really true, because it depends on where your time is. If most of your time is not in transport, and they can transport 20 times faster, that doesn’t actually help you that much, right? It’s about which techniques actually have an impact in your situation. That’s what I got. Let me know your thoughts. I’ve got 30 seconds for questions, although I think there’s a break now. If people want to ask questions beyond that, I think that’s probably okay, or people can come out. Thank you. Speaker 2:On premise use cases, how long does Gandiva use? Jacques:Yeah, so the question was, with on-premise use cases, how many things are still valuable? Okay, so that’s a good question. If you think about it, Gandiva is as impactful on-premise as in the cloud. Arrow Flight is just as impactful on- premise as in the cloud, the IO pattern is more impactful if you’re working in a situation where you’ve got remote clusters. For Dremio, we have many customers that have multiple clusters. In that situation, some of the clusters are going to be more distant, and so taking advantage of the way that we’re reading can be hugely advantageous, as well as the columnar cache. Those two are really more about whether or not there’s enough distance between the data and where you’re at. if you’ve got low latency/local storage, then those two things probably will have less impact. Other questions? Thanks, everybody.